Our weekly Briefing is free, but you should upgrade to access all of our reporting, resources, and a monthly workshop.

This week on Indicator

Craig published a deep dive guide on how to search for information online in the age of AI—with help from 11 experts who do this for a living. There are dedicated sections on AI, image search, and social search. Plus four key strategies for making search work now.

Alexios ran a new audit and found that at least 4,431 ads for AI nudifiers have run on Meta since December 4. Apple and Google hosted several of the offending apps, and some of the nudifiers used mainstream platforms like Amazon, PayPal, and Stripe to process payments.

FYI our monthly members-only workshop is next Wednesday, Jan. 28 at 12 pm ET. Craig will showcase some of the techniques he used in his recent investigation into an affiliate scam on TikTok. A former threat investigator for Meta and Google will also talk about how an LLM can assist with this type of investigation! Upgrade now and join us!

Deception in the News

📍 YouTube head Neal Mohan listed managing AI slop as one of his priorities for 2026 that will help “supercharge and safeguard creativity” on the platform. Mohan wrote that YouTube will build on its “established systems that have been very successful in combatting spam and clickbait, and reducing the spread of low quality, repetitive content.”

📍 TikTok is still running deepfakes that mock the death of Renée Good. This comes 10 days after the platform took down several similar videos that were flagged by Indicator. Another video with 666K views shows an AI avatar of news anchor José Díaz-Balart falsely reporting that Good was having an affair with the ICE officer who killed her. It includes a deepfake of the two kissing. (We previously reported on TikTok’s struggles with fake news accounts that impersonate journalists and major news organizations.)

📍 The British police watchdog is investigating the disgraced head of the West Midlands force following a botched ban on fans of the Israeli football team Maccabi Tel Aviv. The decision had relied on intelligence that included a made-up reference to a Maccabi game against West Ham that was hallucinated by Microsoft Copilot.

📍 The Midas Project reports that a dozen right-wing influencers on X “suddenly began posting extremely similar criticisms of the AI OVERWATCH Act.”

📍 Google’s AI Overviews struggled to get the current year correct, insisting in some queries that next year is not 2027.

📍 Politico reported that a group of 57 Members of the European Parliament have proposed a ban on AI nudifiers under the AI Act.

Tools & Tips

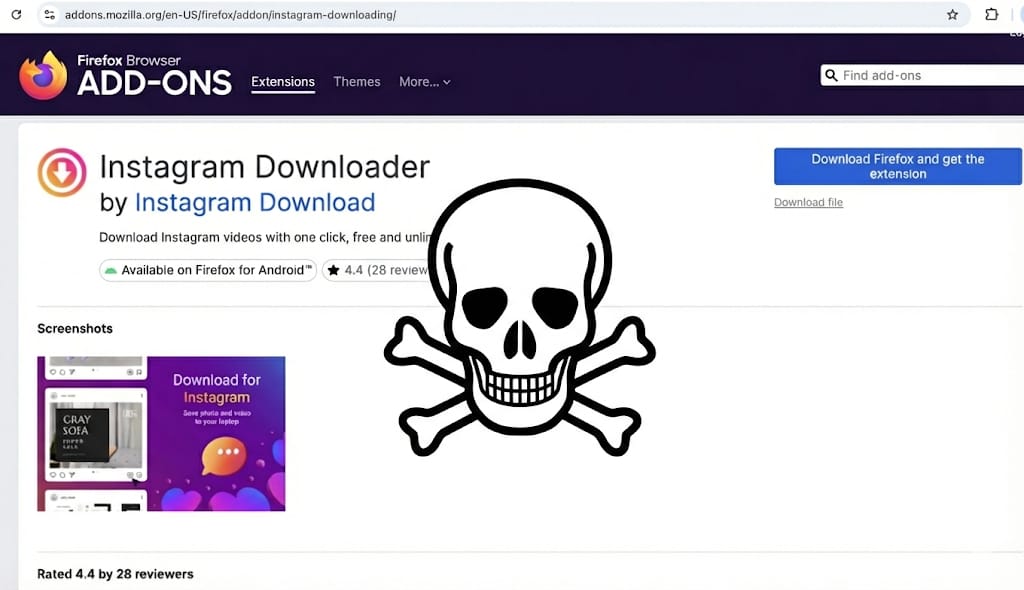

There are many useful OSINT browser extensions, but you need to be very careful about what you install.

Researchers at Koi Security and LayerX identified more than 30 malicious Chrome, Firefox, and Edge extensions that masqueraded as useful utilities.

The extensions promised functionality like downloading Instagram and YouTube videos, taking full page screenshots, and quickly translating text. Behind the scenes, they loaded code that “tracks the victim’s browsing activity, hijacks affiliate links on major e-commerce platforms, and injects invisible iframes for ad fraud and click fraud.”

It’s a good reminder to research extensions before you install them.

When it comes to downloading videos, the best option is to install yt-dlp. I run it from the Terminal on my Mac and it can easily grab videos from a wide range of sites.

Tip: make sure to regularly update to the latest version. And if you’re not technically inclined, just ask your chatbot of choice about how to install it. — Craig
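If you would rather script this than type commands by hand, here is a minimal Python sketch that wraps the yt-dlp command line. It assumes yt-dlp is already installed and on your PATH. The function names, the `downloads` folder, and the filename template are illustrative choices, not anything yt-dlp requires.

```python
import shutil
import subprocess


def build_download_command(url: str, out_dir: str = "downloads") -> list[str]:
    """Return the yt-dlp command line for saving one video into out_dir."""
    return [
        "yt-dlp",
        "-P", out_dir,                        # folder to save into
        "-o", "%(title)s [%(id)s].%(ext)s",   # output filename template
        url,
    ]


def download(url: str, out_dir: str = "downloads") -> None:
    """Try to update yt-dlp, then download the video at url."""
    if shutil.which("yt-dlp") is None:
        raise RuntimeError("yt-dlp is not installed or not on PATH")
    # -U asks yt-dlp to update itself. It may decline if yt-dlp was
    # installed through a package manager, so a failure here is not fatal.
    subprocess.run(["yt-dlp", "-U"], check=False)
    subprocess.run(build_download_command(url, out_dir), check=True)
```

Calling `download()` with a video URL saves the file into the `downloads` folder. The update step runs first because sites change often and older yt-dlp versions tend to break.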

📍 The Data and Research Center wrote a detailed case study of how they extracted structured information “from roughly 100,000 photos, many of them duplicates, without looking at every single one” to support the reporting of the Damascus Dossier by Norddeutscher Rundfunk (NDR) and the International Consortium of Investigative Journalists (ICIJ). The project documented the killing machine that operated under Syria's former president Bashar Al-Assad.

An excerpt:

We came up with a three-stage processing pipeline. In the first stage, we extracted EXIF metadata from the photos and analyzed the folder structures they came from. The second stage used computer vision to detect and isolate the evidence labels within the images, and the third stage applied text recognition (or OCR) to read the information from those labels.
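The case study does not spell out how duplicates were handled, so the following is only a sketch of one common first pass, not the team's method: walk the folder tree and group files whose bytes are identical by SHA-256 hash. The function names are hypothetical, and this approach only catches exact copies. Resized or re-encoded photos would need something like perceptual hashing.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def group_exact_duplicates(root: Path) -> dict[str, list[Path]]:
    """Map each digest to the files sharing it, keeping only duplicates."""
    groups: dict[str, list[Path]] = defaultdict(list)
    for path in sorted(root.rglob("*")):
        if path.is_file():
            groups[file_digest(path)].append(path)
    # Keep only digests that occur more than once.
    return {d: paths for d, paths in groups.items() if len(paths) > 1}
```

Reviewing one file per group instead of every copy is one way to avoid "looking at every single one."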

📍 Tony Marshall is moving his huge list of OSINT resources from GitHub to a new domain, the-osint-toolbox.com. Worth bookmarking the new site as the migration gets underway.

📍 Derek Bowler wrote, “The SIFT method: A step-by-step guide to investigative verification.”

📍 The UK OSINT Community published a video walkthrough of solutions for week 1 of its CTF challenge. It “breaks down how to approach an image-based challenge methodically, identify useful visual indicators, and apply practical analysis techniques to extract meaningful intelligence from a single image.”

Events & Learning

📍 Partner event: OsintifyCON is a virtual conference dedicated to Actionable Open Source Intelligence and designed for investigators, analysts, journalists, and intelligence professionals. The conference is on Feb. 5 and the training is on Feb. 6. Indicator has partnered with OsintifyCON to offer our readers discounted tickets. Go here to get 30% off an early bird conference ticket, and here to get 30% off the early bird price for a full day of training. The discount ends soon!

📍 OSINT Industries is hosting a free webinar on Jan. 28, “OSINT Investigation: Tracking Fentanyl Precursor Manufacturing in China.” Register here.

Reports & Research

📍 The Bureau of Investigative Journalism reported that a PR firm founded by the current communications chief for the British Prime Minister “polished” the Wikipedia pages of its clients for almost a decade. Beneficiaries of the “wikilaundering” service, which broke the encyclopedia’s rules, reportedly included the Qatari government and the Gates Foundation.

📍 The Center for Countering Digital Hate found almost 13,000 sexualized images in a random sample of 20,000 images generated by Grok between December 31 and January 8. If the proportion holds across all images generated by Grok during that time period, the total number of sexualized deepfakes created by Elon Musk’s chatbot would amount to 3 million. A separate analysis by The New York Times estimated the number to be 1.8 million. It’s worth noting, as we wrote two weeks ago, that not all of the images would have been nonconsensual in nature. But even 10% of the estimate(s) would be massive.
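For readers who want to check the math, here is a rough back-of-the-envelope sketch using only the figures above. It treats "almost 13,000" as exactly 13,000, so the share is a slight overestimate, and the implied total number of Grok images is our inference from the 3 million figure, not a number taken from the report.

```python
# Figures as reported above; "almost 13,000" is rounded up to 13,000.
sample_size = 20_000
sexualized_in_sample = 13_000

share = sexualized_in_sample / sample_size  # fraction of the sample

estimates = {"CCDH": 3_000_000, "NYT": 1_800_000}

# Total images that would make the CCDH extrapolation come out to 3 million.
implied_total_images = estimates["CCDH"] / share

# What 10% of each estimate would amount to.
ten_percent = {name: est // 10 for name, est in estimates.items()}

print(f"Share of sample: {share:.0%}")
print(f"Implied total images: {implied_total_images:,.0f}")
for name, value in ten_percent.items():
    print(f"10% of {name} estimate: {value:,}")
```

Even the lower bound here, 10% of the Times estimate, works out to 180,000 images.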

📍 NewsGuard stripped the watermarks from 20 videos created with Sora and asked ChatGPT, Gemini, and Grok whether they were AI-generated. The LLMs mostly failed to answer correctly.

Want more studies on digital deception? Paid subscribers get access to our Academic Library with 55 categorized and summarized studies.

One More Thing

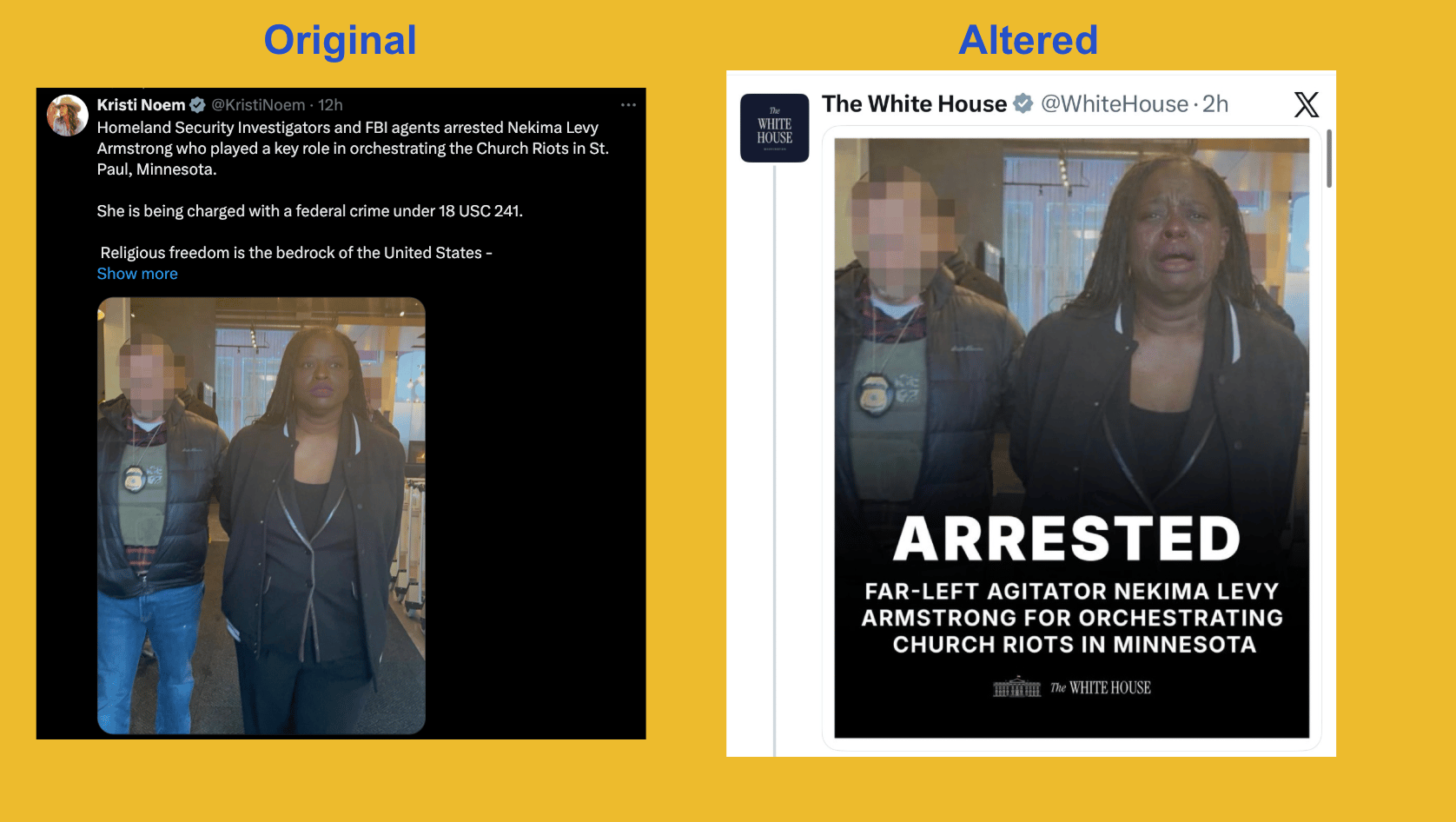

In a new and disturbing first, the White House apparently used AI to alter a photo of Nekima Levy Armstrong, a civil rights lawyer who was arrested after she interrupted a church service in St. Paul, Minn.

Armstrong was charged with conspiracy to deprive rights, a federal felony, after she and two others protested a pastor’s apparent work with Immigration and Customs Enforcement.

In response to questions and concerns about the image, White House deputy communications director Kaelan Dorr posted on X: "YET AGAIN to the people who feel the need to reflexively defend perpetrators of heinous crimes in our country I share with you this message: Enforcement of the law will continue. The memes will continue. Thank you for your attention to this matter."

Indicator is a reader-funded publication.

Please upgrade to access all of our content, including our how-to guides, Academic Library, and live monthly workshops.