Hey there!

Indicator is a tiny operation: just Craig and me. Paid memberships keep us going.

We have a 20% discount that expires tonight; consider upgrading now to support our work and get discounted access to all of our reporting and training.

Either way, thanks for being a subscriber and caring about the health of our online information ecosystem.

— Alexios

Meta ran more than 25,000 ads for AI nudifiers in 2025.

Despite promising in June of last year to crack down on such ads, the company has failed to stop the flow, according to the latest audit by Indicator.

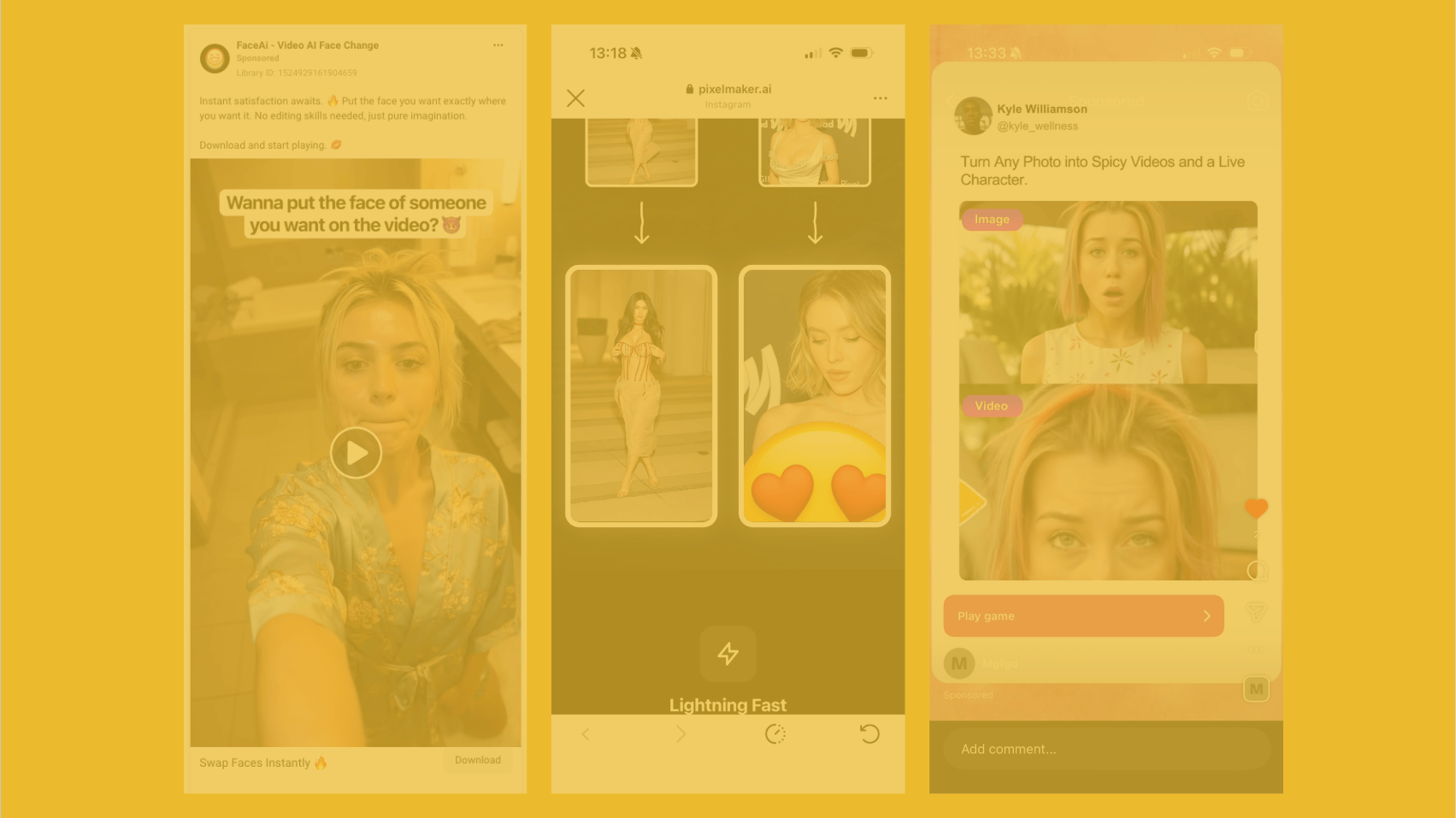

I identified at least 4,431 nudifier ads that have appeared across Meta’s platforms since December 4. The ads promise to turn any photo into a pornographic video and point to 13 different websites and 6 apps. But in an interesting twist, some ads appear to send users to services that fail to deliver the promised nudes, suggesting that scammers have jumped on the nudifier trend. (Go here to read all of Indicator’s coverage of AI nudifier apps.)

One of the apps advertised, Swap Faces in Videos - FaceAi, had more than 100,000 downloads on the Google Play store. A recurrent ad among the hundreds it ran on Meta is a video where Sydney Sweeney’s face is swapped onto a woman who is about to perform oral sex. (Nearly identical ads previously targeted Emma Watson.) The app was rated “E” for everyone by Google.

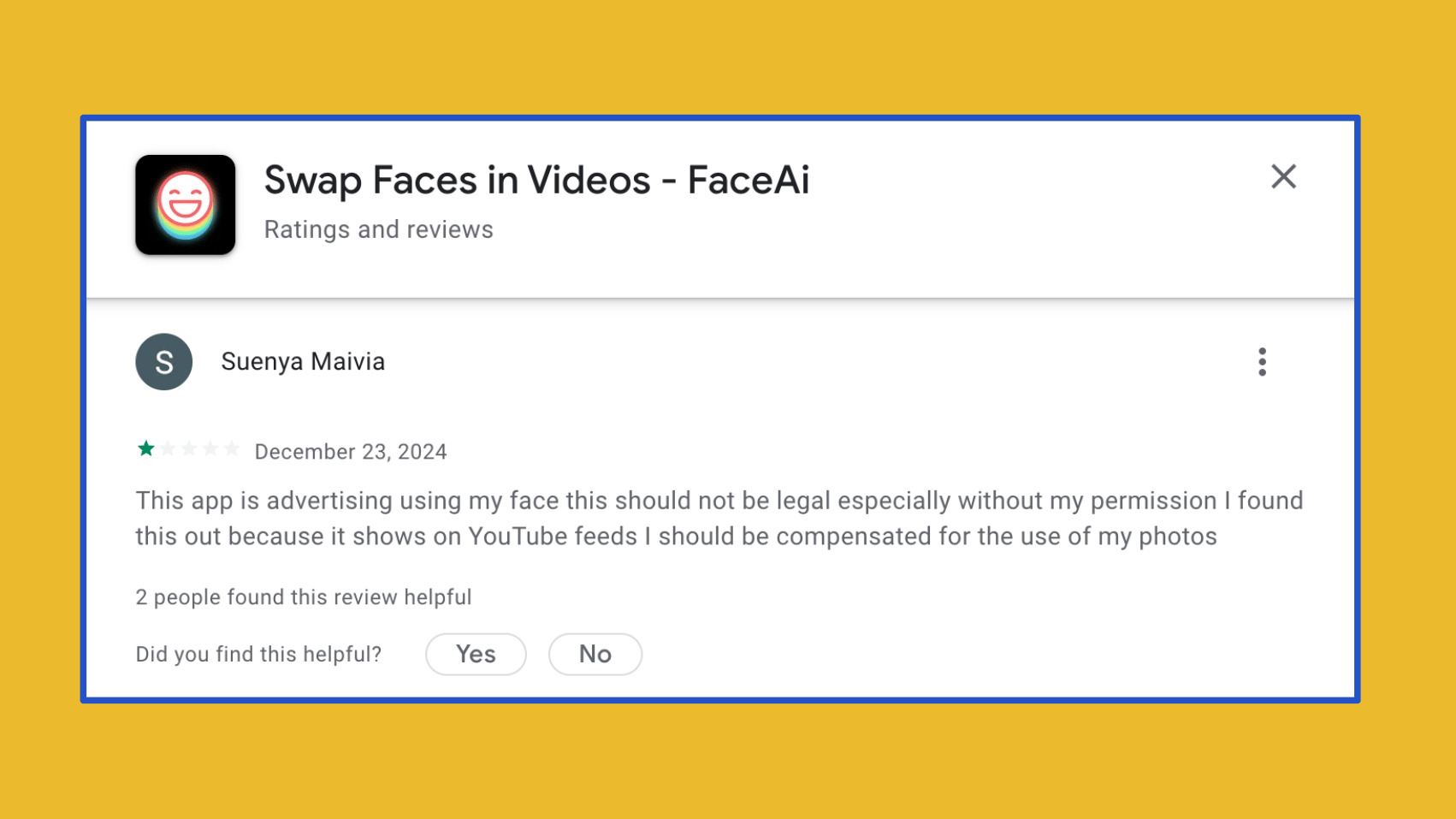

One user review even claims that the app used their likeness in YouTube ads.

Google deleted Swap Faces and two other apps we identified from the Play store following our outreach. A spokesperson said that they violated Play’s sexual content policy. Apple did not respond to our request for comment on three related apps available on its own store.

The nudifier ads identified by Indicator typically promote smaller websites and apps that have tens of thousands of visits per month or a similar number of app downloads. The most popular services in this abusive category often rely on referrals from adult websites and content creators to drive user acquisition, rather than ads on social media. They also appear to have a reliable stream of repeat customers.

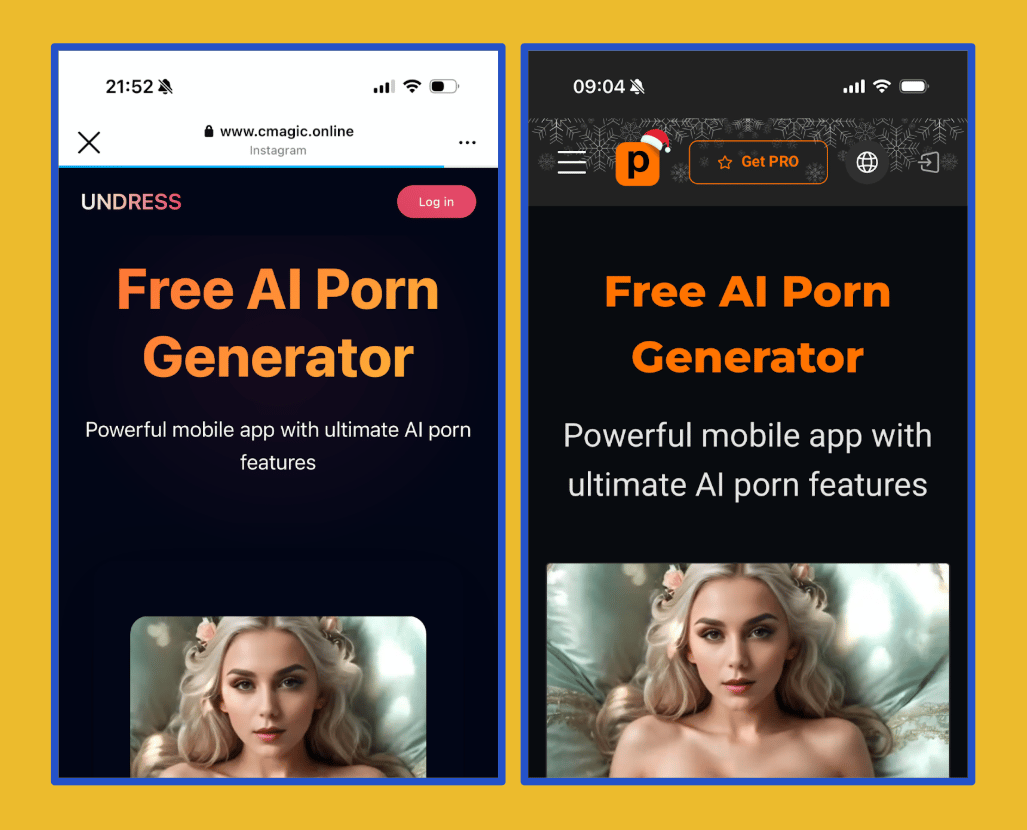

One of the nudifiers in our sample seems to be trying to leverage this popularity by impersonating pornworks[.]com, a large nudifier and AI porn generator (see below). The same website put a big orange P**nworks logo on some of its ads on Meta.

Spoof websites of big brands like major news outlets or e-commerce sites are common. The fact that impersonators are starting to crop up in the AI nudifier space suggests that some of the services are big enough to be recognizable by users. According to Similarweb, Pornworks got almost 10 million visits in December 2025.

The nudifiers in our sample are operated by an overlapping group of businesses with little online presence (or at most, AI-generated corporate websites with unclickable menus).
