In 2023, girls around the world started finding out that their male classmates were using AI to convert their photos into nudes against their will. This happened in Australia, Brazil, Canada, Greece, Italy, Singapore, South Korea, the UK, and the US, among other places.

It didn’t come out of nowhere. Prominent women had been victimized for several years before that, with their likenesses “face-swapped” into pornographic videos and posted on platforms like MrDeepFakes. One victim was disinformation researcher Nina Jankowicz, who wrote that the “deeper purpose” of nonconsensual AI nudes is darker than sexual gratification. The goal is to “humiliate, shame, and objectify women, especially women who have the temerity to speak out.”

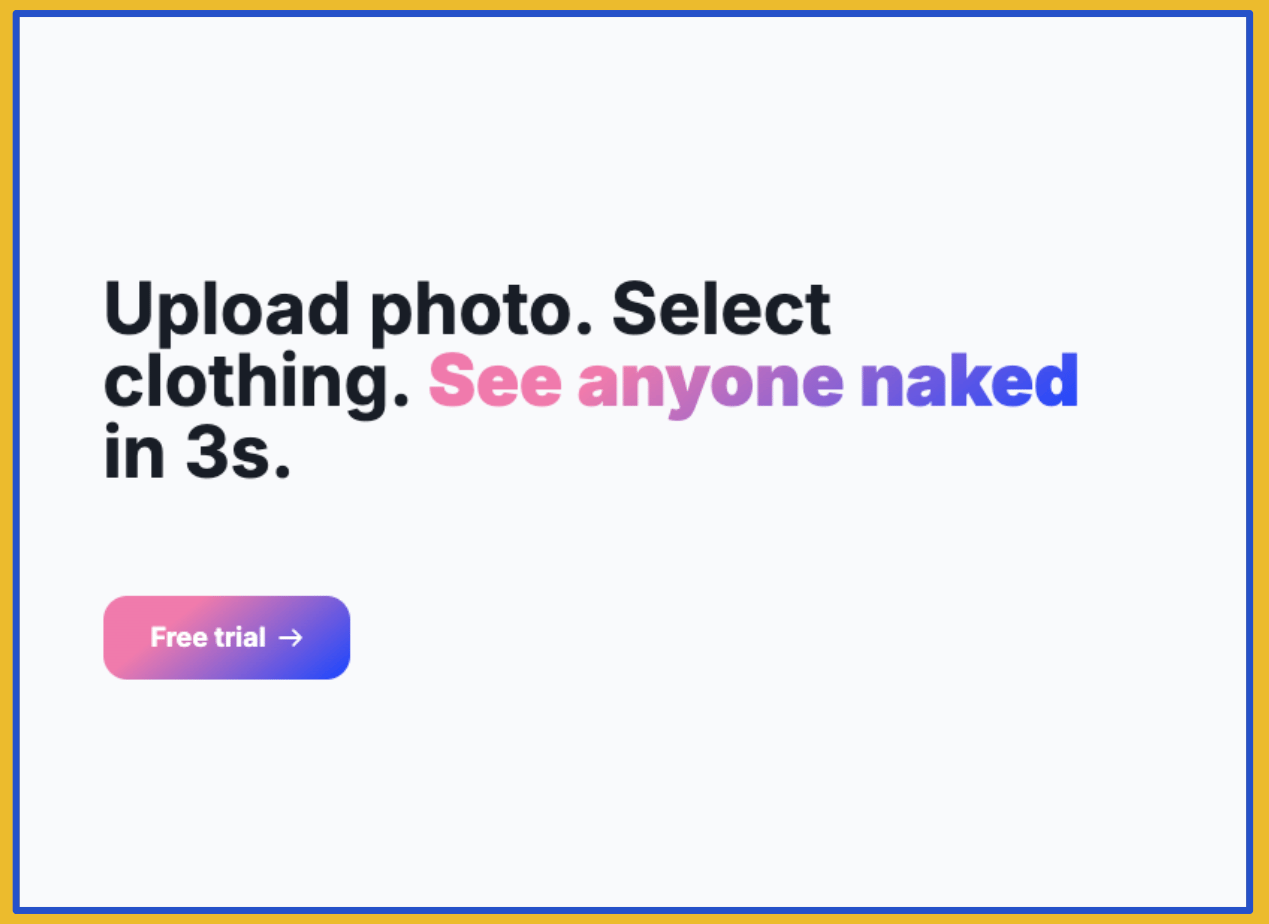

What changed in 2023 was that general-purpose AI models made it easy for anyone to target any woman. You didn’t need technical skills or extensive footage of the victim. An internet connection and a single photo were enough.

Two years after those early school cases, these “AI nudifiers” remain as entrenched as ever. While there has been some progress this year in limiting the dissemination of deepfake nudes – notably, the shuttering of MrDeepFakes – it’s still just as easy to generate synthetic nonconsensual intimate imagery (SYNCII).

Take Clothoff, one of the nudifiers most frequently cited in public reporting. In October 2025, its main domain got 1.98 million visits, more than twice its monthly average from December 2024 to May 2025. Clothoff isn’t alone. Together, ten of the top AI nudifying websites we track¹ received more than 10 million visits in October 2025, a 4% increase from six months earlier.

Traffic is steady in part because many tech platforms and companies continue to provide services to these websites, often making money in the process.

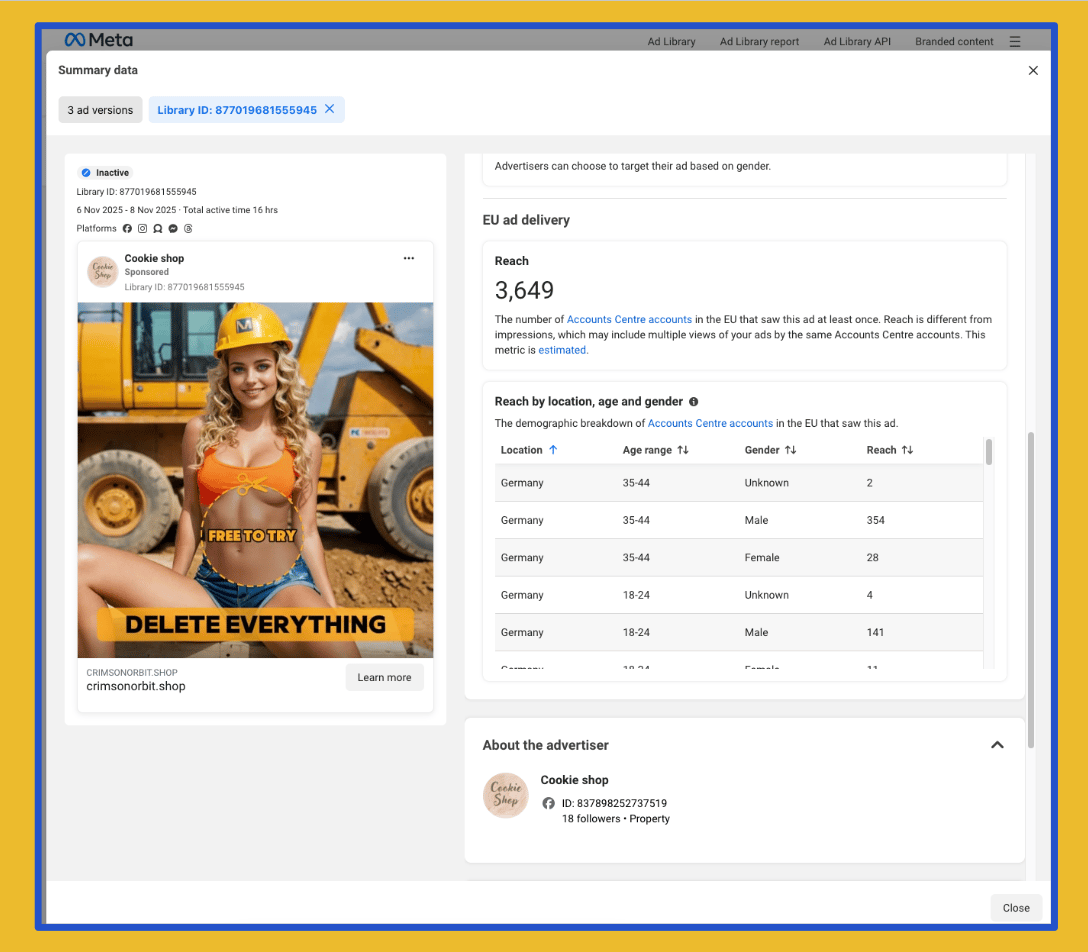

Since September 10, Meta has run at least 9,156 ads for 11 different nudifying tools on Facebook and Instagram.² This brings the total number of ads for AI nudifiers that Indicator has uncovered on Meta this year to more than 25,000.

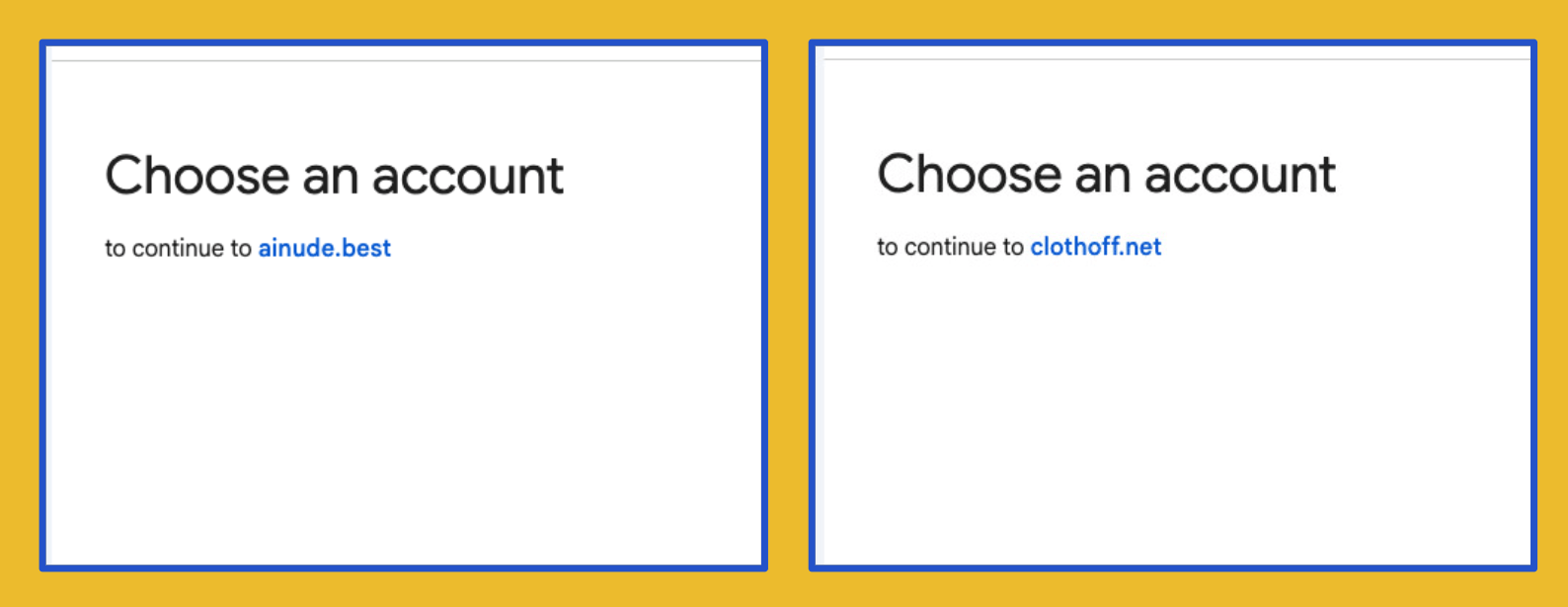

These tools often have apps in the Apple³ and Google app stores. X is taking money from one popular nudifier in exchange for a blue badge. And several other companies provide the core infrastructure these sites need to operate. According to data from BuiltWith, five of the top nudifiers in our sample use Cloudflare for a range of services, and six out of 10 let users log in with a Google account.

Frankly, I’m sick and tired of writing about these awful websites. But these harmful tools reach millions and make millions, so it’s paramount to keep our attention on them.

For this article, I spoke to 11 experts from academia, civil society, government, and the private sector to determine what it would take to significantly curtail the reach of AI nudifiers. I came out of it with uncharacteristic optimism that the concerted effort of people working on this problem may start yielding results in 2026. But it’s going to take work.

Rebecca Portnoff, Vice President of Data Science & AI at the nonprofit Thorn, gave me a useful way to categorize the countermeasures. Roughly speaking, making a dent in the problem will require deplatforming the nudifiers, debilitating their technology, and deterring their users. Let’s look at each of these three interventions in order.
