Hey folks, today’s news warrants a bonus newsletter.

I have spent more than a year covering the AI nudifier network Crush AI. My work exposing this operation led Meta to take down tens of thousands of ads and has been quoted in letters to tech CEOs by US Senator Dick Durbin and Representative Debbie Dingell.

Today, Meta is stepping up the pressure on Crush AI. This decision shows that the work done by Indicator and others¹ to publicly document online harms can drive substantial platform action.

If you join us as a paying member, we can keep pushing platforms to do better.

Meta announced today that it is suing Joy Timeline HK Limited, the Hong Kong-based company behind the Crush AI network of AI nudifiers.

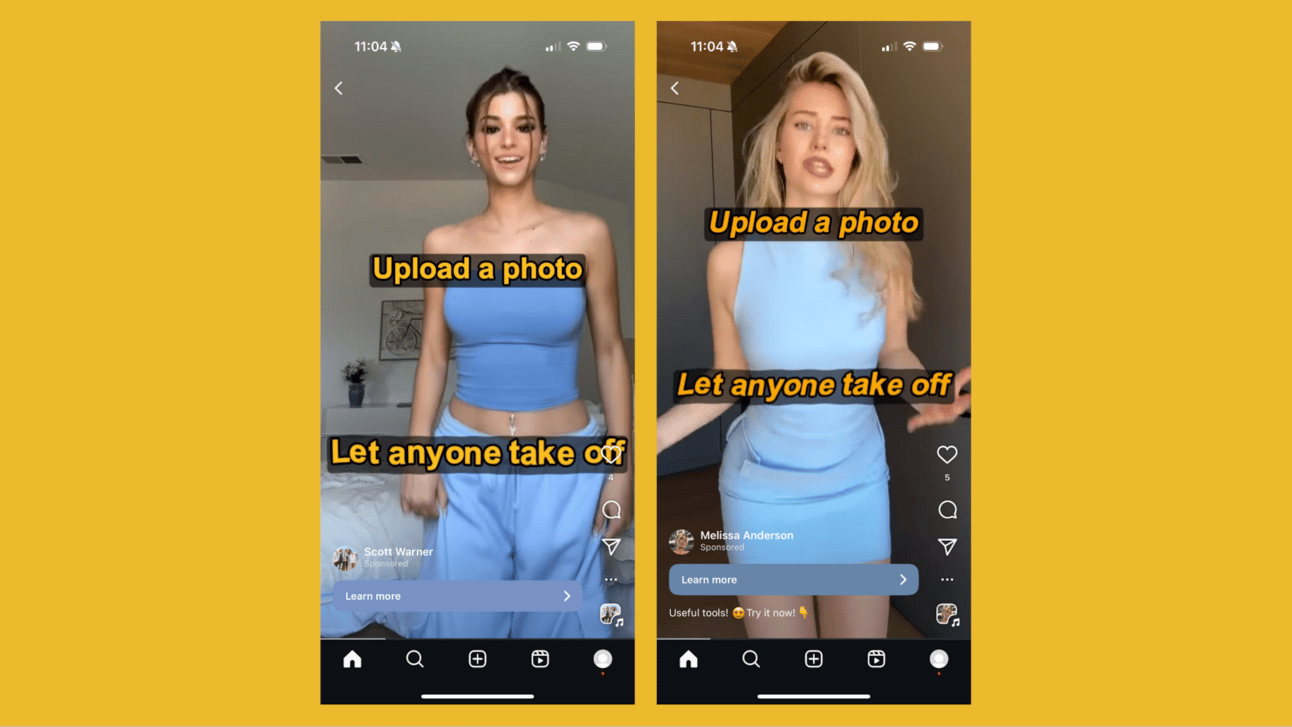

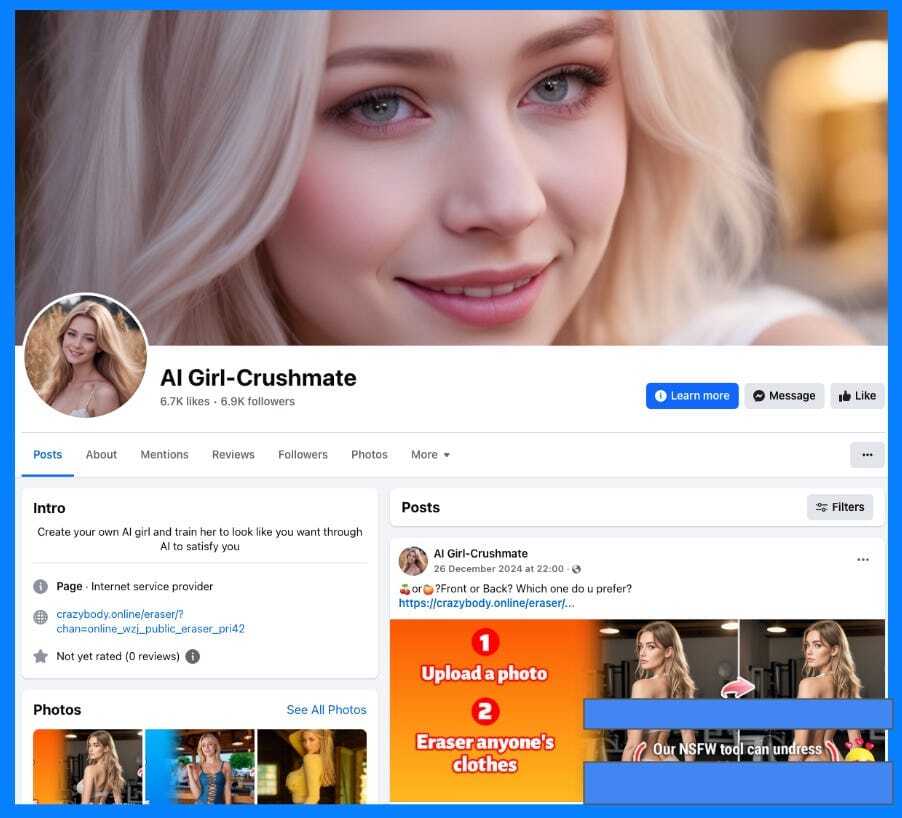

Crush AI’s websites can transform a single photo of a real person into a synthetic video of them undressing, or depict them in a range of sex poses. Over the past year, the network has been able to place thousands of ads on Meta products.

Meta says its lawsuit aims “to prevent [Joy Timeline] from advertising CrushAI apps on Meta platforms” and “follows multiple attempts by Joy Timeline HK Limited to circumvent Meta’s ad review process and continue placing these ads, after they were repeatedly removed for breaking our rules.”

The company also announced it will deploy new tools to detect ads for AI nudifiers and that it will share more information about the actors behind them with other tech platforms through the Lantern project.

The continued presence of nudifier ads shows the relative sophistication of Joy Timeline and other enablers of AI abuse. Over the past year, I have seen Crush AI circumvent Meta’s moderation by creating dozens of different domains, hundreds of different Meta advertiser accounts, and thousands of individual ads.

Nudifier ads often avoid featuring full nudity or include a few nude frames within a longer video. This makes classifiers built to flag sexual content less effective at detecting this type of material.

Meta says it has developed a custom classifier to identify nudifier ads even when they don’t contain nudity. It is also promising quicker action on ads that are similar to those it previously removed. Here’s how the company describes it:

> We’ve worked with external experts and our own specialist teams to expand the list of safety-related terms, phrases and emojis that our systems are trained to detect within these ads. We’ve also applied the tactics we use to disrupt networks of coordinated inauthentic activity to find and remove networks of accounts operating these ads. Since the start of the year, our expert teams have run in-depth investigations to expose and disrupt four separate networks of accounts that were attempting to run ads promoting these services.

This is a welcome, if overdue, step. It remains to be seen how effective the new measures will be. While writing this post on Wednesday, I was able to quickly find 13 active ads on Meta for a Crush AI website. I also found more than 100 ads for other nudifiers.² Several featured a video of influencer Brooke Monk being nudified in order to showcase their product.

Meta removed the ads and related accounts I shared with them. The company said it will continue to monitor the situation and reserved the right to take legal action against other repeat offenders.

Indicator will most assuredly continue monitoring as well.

Read our full coverage of the Crush AI network below and consider becoming a paying subscriber to support our work exposing this abuse vector:

1 I am thinking especially of the folks at 404 Media, who have covered this beat with the urgency it deserves and whose reporting led to significant changes at Civitai and beyond. I’m particularly grateful to Emanuel Maiberg for amplifying my early findings and being the reason legislators came across them. Emanuel, you are a class act.

2 Meta took them down shortly after I reported them to the company, but I’m sharing the links for posterity: 48 active ads for intellectubot[.]art and 35 active ads for each of loveone[.]pal, lovepal[.]wiki, loveone[.]online and loveone[.]cdf.