This week on Indicator

Craig found over 3,000 Meta ads for nudifiers and AI girlfriend apps that featured bare breasts, simulated sex acts, and other explicit content. This is in addition to the more than 4,000 nudifier ads we reported on a few weeks ago.

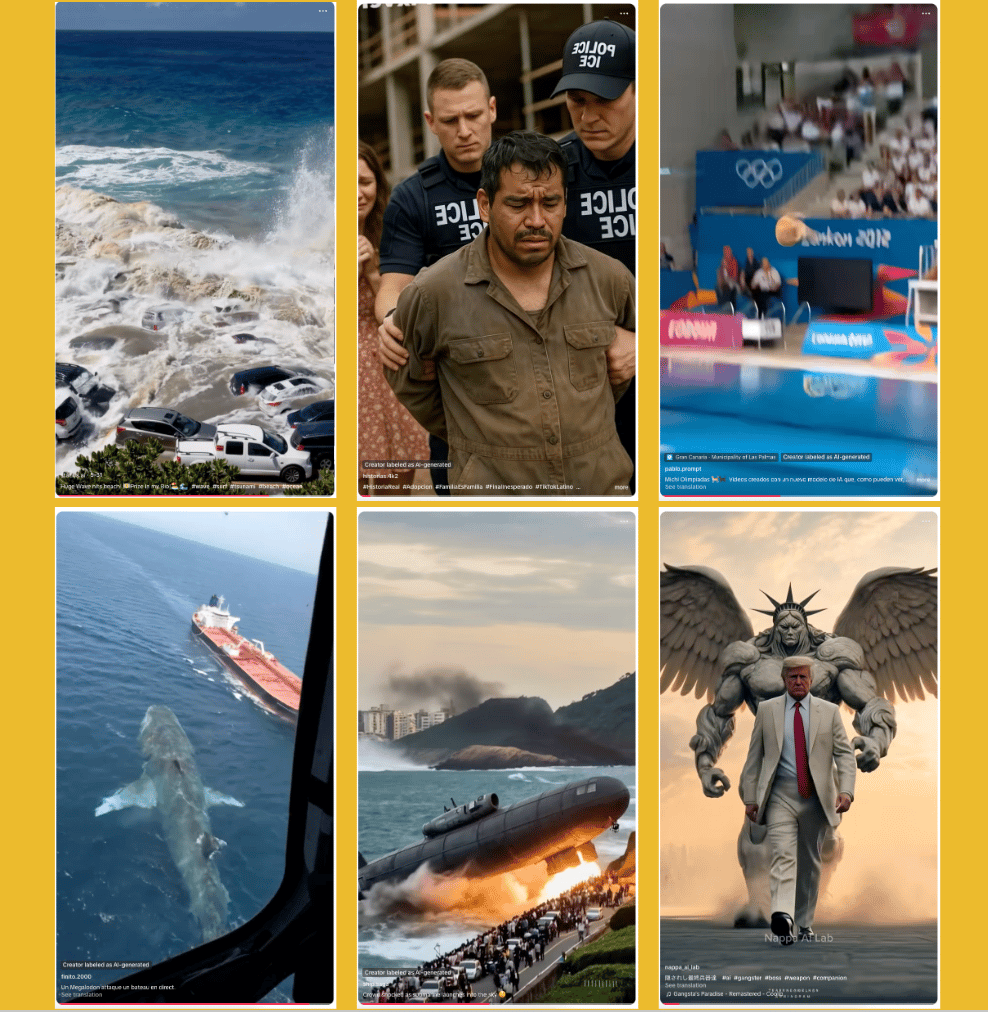

Alexios published the Indicator Guide to AI labels, an overview of how and when platforms are flagging AI-generated content on their services. We expect to update this guide frequently.

OpenAI launches synthetic social network

This week OpenAI launched Sora 2, a fully synthetic social network.

The company wrote that “concerns about doomscrolling, addiction, isolation, and RL-sloptimized feeds are top of mind” and promised to be responsible™ about its own RL-sloptimized feed.

Users can now choose between scrolling for AI slop on their regular social networks and opting for a slop-only menu that includes Sora 2 and Meta’s Vibes.

Along with its eerily smooth motion generation and accompanying audio, Sora 2’s innovation is that it quickly enables users to create convincing AI avatars of themselves that can be deployed in all kinds of synthetic scenarios. After reading three numbers and tilting my head up and left, I could place a credible Alexios deepfake in all kinds of scenarios.

I first thought about all of the fabricated eyewitness accounts that this will allow for. So I deployed my AI replica to report on a fake fire at the UN and a fake hurricane in Florida. Video fidelity appears to be higher than audio, with Sora at one point giving me a British accent. As with all generative AI, it can also get basic things pathetically wrong.

You can also make deepfakes of other people, depending on their permissions, which is how people reimagined Sam Altman as an unhinged conspiracy theorist, a petty thief, or a Pokemon shepherd. Sora didn’t let me create videos of Giorgia Meloni, Donald Trump, or Jannik Sinner. But it does allow you to generate celebrities and historical figures. Which is how we get AI slop of Martin Luther King Jr. and JFK, as well as Fred Rogers or the recently deceased comedian Norm Macdonald.

You can also use Sora for your run-of-the-mill fake historical footage and made-up geopolitical events.

OpenAI adds a bouncing watermark to Sora videos but this can be easily cropped out, as I did in the fake fire video that I (briefly) uploaded to Instagram, LinkedIn, and YouTube. None of the platforms labeled the video as AI-generated.

The best-case scenario is that Sora 2 remains a receptacle for highly personalized AI slop, a place where users can ogle imagined performances of themselves all day. The risk is that it further normalizes a blended unreality where anyone can be deepfaked without their consent to a mass audience.

As Sam Gregory of Witness put it: “there's a chilling casualness, and sense of their own playful discovery at the expense of others, to the way OpenAI has released Sora2 - enabling easy appropriation of likenesses and simulation of real-life events.”

And just as OpenAI rolled back protections on photorealism in ChatGPT, I would not be surprised if it eventually eliminates Sora 2’s limitations on deepfaking other people. — Alexios

Deception in the News

📍 Grok flipped the findings of a Lead Stories fact check on a suspected Michigan shooter.

📍 Donald Trump posted, then deleted, an AI-generated video of his daughter-in-law promoting the nonexistent cure-all “medbed” technology. Anna Merlan reports that even prior to this, “the medbed discourse was extremely active on Truth Social.” That wasn’t even the only deepfake Trump posted this week.

📍 An American marketing company retained by Israel’s Ministry of Foreign Affairs reportedly offered to “develop a bot-based program on various social media channels (e.g. Instagram, TikTok, LinkedIn and YouTube) that ‘floods the zone’ with the Ministry of Foreign Affairs’ pro-Israel message.”

📍 Digi, an Australian lobby group for tech platforms, is considering dropping misinformation from the industry’s code of practice. The group reportedly warned that the concept is “subjective,” “fundamentally linked to people’s beliefs and value systems,” and that there is a risk of “misinformation alarmism.”

📍 Amazon sold AI-generated biographies of Kaleb Horton, a writer who recently died. The author of a real obituary about Horton told 404 Media that “I cannot overstate how disgusting I find this kind of ‘A.I.’ dog shit in the first place, never mind under these circumstances.”

📍 Media Matters found that the filmmaker behind the infamous “Plandemic” conspiracy video is marketing a supplement on TikTok’s ecommerce platform that promises to “bulletproof your immune system.”

📍 The Tech Transparency Project identified “63 scam advertisers who have collectively run more than 150,000 political ads on Meta platforms, spending $49 million.” And the Nieman Journalism Lab wrote about how broadcast journalists around the world are being impersonated in deepfake scam ads.

Tools & Tips

Meta set off a bit of a panic in the OSINT community a few weeks ago by removing the “Posts” filter from Facebook native search.

For a short period, you couldn’t restrict search results to only show posts. The options for profiles, groups, pages etc. remained. But no posts. What the hell.

Paul Myers of the BBC was the first person to alert me to the change. Independent trainer and investigator Henk Van Ess stepped in to remind people that his Who posted what? tool is still a great way to filter and search posts on Facebook. But it was pretty frustrating to think that the posts filter was gone for good.

Well, I have some good news: you can once again filter Facebook search results by posts.

I contacted Meta to ask why they killed the posts filter and to find out if it would come back. The spokesperson said that the company moved the post filter under the “All” tab/menu. The change is now live on desktop and mobile.

Enter your search term(s) in the native search bar and you’ll see options to filter posts here:

It’s a bit confusing because the results page initially defaults to All and shows, well, all types of content, including profiles. But an easy way to restrict it to posts is to select the “Posts from” option and choose “Anyone” or “Public posts.”

Now you only see posts. You can further refine the results by year, among other options. —Craig

📍 i-Intelligence released the 2025 edition of osinthandbook.com. It’s a huge, curated list of tools for different tasks. Use it in conjunction with Bellingcat’s Online Investigation Toolkit.

📍 Osint.moe is an LLM-powered research tool where “people, organizations, websites, locations, and other entities are captured as nodes, and evidence is represented as relationships between them.” (via Cyber Detective)

📍 Petro Cherkasets of OSINT Team published, “How to use stealer logs for OSINT investigations.”

📍 In “Beyond Dashboards: OSINT’s Next Two Decades,” Nico Dekens writes that, “The next two decades will bury lazy investigators under dashboards and AI assistants. The ones who survive will be the ones who treat OSINT like a discipline, not a dopamine hobby.”

Reports & Research

This week saw the release of two interesting reports about studying and measuring disinformation.

Science Feedback and a group of partners published “Measuring the State of Online Disinformation in Europe on Very Large Online Platforms.” It tackled the always-difficult-to-measure question of prevalence. Using exposure-weighted random samples, the team found that TikTok had the highest prevalence of mis/disinfo at close to 20%(!). Here’s a comparison chart:

Other key findings were that “low-credibility accounts enjoy a clear interaction advantage over high-credibility accounts” and that “low-credibility actors are largely monetized on YouTube and (to a narrower extent) on Facebook; elsewhere, public data are inadequate.” A summary is here. Full report here.
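
For readers wondering what “exposure-weighted” sampling means in practice, here is a minimal sketch. It is not the report’s actual method, which isn’t detailed here. It assumes a hypothetical list of posts with made-up view counts and a made-up `misinfo` label, and it draws posts with probability proportional to views, so the resulting share approximates how much of what people actually see is misinformation rather than how much of what gets posted is.

```python
import random

def exposure_weighted_sample(posts, k, seed=0):
    """Draw k posts (with replacement), weighted by view count."""
    rng = random.Random(seed)
    weights = [p["views"] for p in posts]
    return rng.choices(posts, weights=weights, k=k)

def prevalence(sample):
    """Share of sampled posts labeled as mis/disinformation."""
    return sum(p["misinfo"] for p in sample) / len(sample)

# Hypothetical toy data: one viral false post, two low-reach accurate ones.
posts = [
    {"id": 1, "views": 9000, "misinfo": True},
    {"id": 2, "views": 500, "misinfo": False},
    {"id": 3, "views": 500, "misinfo": False},
]

sample = exposure_weighted_sample(posts, k=1000)
weighted = prevalence(sample)    # close to the 90% share of total views
unweighted = prevalence(posts)   # 1 of 3 posts, ignoring reach

print(f"exposure-weighted: {weighted:.2f}, unweighted: {unweighted:.2f}")
```

In this toy data the two numbers diverge sharply, which is why a per-post count and an exposure-weighted estimate can tell very different stories about the same platform.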

The other report of note is the EU Disinfo Lab’s comparative analysis of different frameworks for studying and/or measuring the impact of disinformation. The report is here. The organization also updated its framework, the Impact-Risk Index.

📍 The International Center for Journalists published a mixed-methods case study of the “critical role played by the ethnic and Indigenous press in countering disinformation in the U.S.”

📍 Open Measures’ research team dug into the Sydney Sweeney “Great Jeans” social media outrage cycle. It found that accusations of racism “had been fringe until conservative figures amplified them in an attempt to disparage their political enemies.”

Paid members get access to all of our Resources, including our taxonomy of AI slop:

One More Thing

Indicator is a reader-funded publication.

Please upgrade to get access to all of our content, including our how-to guides and Academic Library, and to our live monthly workshops.