Our weekly Briefing is free, but you should upgrade to access all of our reporting, resources, and a monthly workshop.

This week on Indicator

Craig watched more than 8 hours of OSINT training videos and extracted the best insights, tools, and techniques. Learn how to pull hidden information from websites, investigate email addresses, dig into SSL certificates, and use real estate sites to assist in investigations.

Alexios scraped and analyzed four months of posts in a WhatsApp group dedicated to buying and selling TikTok accounts. He put together a credible estimate of the cost of a second-hand handle.

We also published the first episode of our new podcast, Show & Tell! It takes you inside digital investigations by leading journalists, OSINT investigators, threat analysts, and researchers. Our first guest was Jeff Horwitz from Reuters. Jeff shared how he used document analysis, quantification methods, burner accounts, and internal sources to uncover Meta’s multibillion-dollar scam ad business.

Watch/listen and subscribe on YouTube, Spotify and Apple Podcasts.

Deception in the News

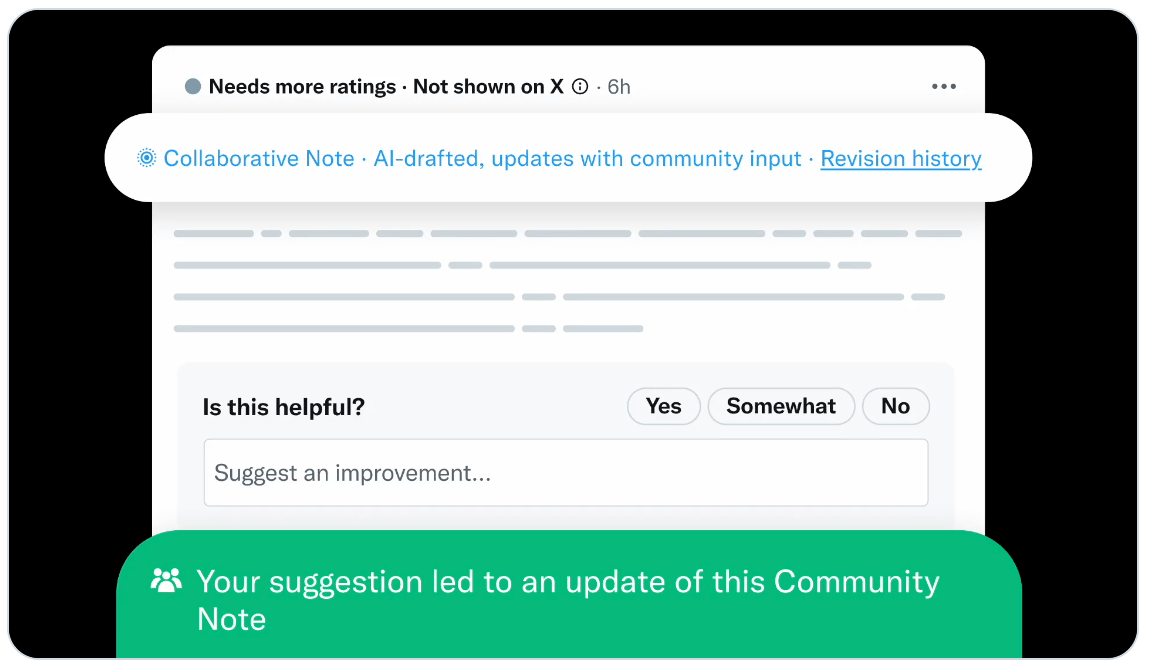

📍 X announced what it’s calling Collaborative Notes: AI-written Community Notes that human writers “refine” through suggestions. For now, these notes can only be requested by contributors with a writing impact of 10 or above, meaning at least ten of their notes were rated helpful.

The company positioned this as an enhancement. Community Notes engineering lead Jay Baxter wrote that the new program leverages “the best of both worlds: humans & AI collaborating => very fast, very accurate notes.” X VP of product Keith Coleman said “we've already seen suggestions improve accuracy + neutrality of notes.”

That may be the case. But as I wrote last week, the proportion of new helpful notes that are AI-written has been steadily increasing and routinely surpassed 20% in January. It’s possible that this launch will push the share of “helpful” AI-written notes above 50% by the end of the year. At that point, a supposedly community-driven system will have become majority machine-generated. — Alexios

📍 The release of the latest batch of the Epstein files was once again accompanied by deepfake images that depicted public figures with the dead pedophile. NewsGuard debunked fakes involving NYC mayor Zohran Mamdani and Venezuelan Nobel Peace Prize laureate María Corina Machado. AFP’s Rachel Blundy reported that AI tools also inaccurately rated some of the synthetic images as genuine. And as NewsGuard found, Grok and Gemini happily generated synthetic images of Epstein with leading politicians.

📍 AFP reports that at least 43 YouTube channels published deepfakes of international relations professor John Mearsheimer in which he appeared to make all kinds of fabricated claims about geopolitics. Mearsheimer’s office described YouTube’s takedown process as slow and cumbersome. He eventually launched his own official channel as a way to counter the fakes.

📍 Veteran investigative journalist Manisha Ganguly writes on LinkedIn that “mere hours after The Washington Post announced it was laying off its Middle East team - which included Pulitzer nominated journalists - disinformation actors are already at work trying to smear them” on X. She included a screenshot of an X account that falsely claimed the paper laid people off because they were found to be “on Qatari payroll.” According to Ganguly, “this paints a complete picture of the threats against press freedom today.”

📍 Radio-Canada is the latest media outlet to report on the international network of AI slop Facebook pages connected to actors in Vietnam. (Previous reporting from us here and here.) The French-language Canadian public broadcaster identified a network of seven Facebook pages that posted hundreds of fake stories about the Montreal Canadiens hockey team. The pages drove traffic to six websites that received 10 million views in December. The story also quotes Craig.

📍 Facta reports that Italian police published an AI-altered image of an officer protecting another member of law enforcement during a violent clash with protesters in Turin. The edits, which were presumably made to reduce blur, changed the way one of the officers looked. This enabled conspiracy theorists to question whether the event even happened.

Tools & Tips

OSINT World Map

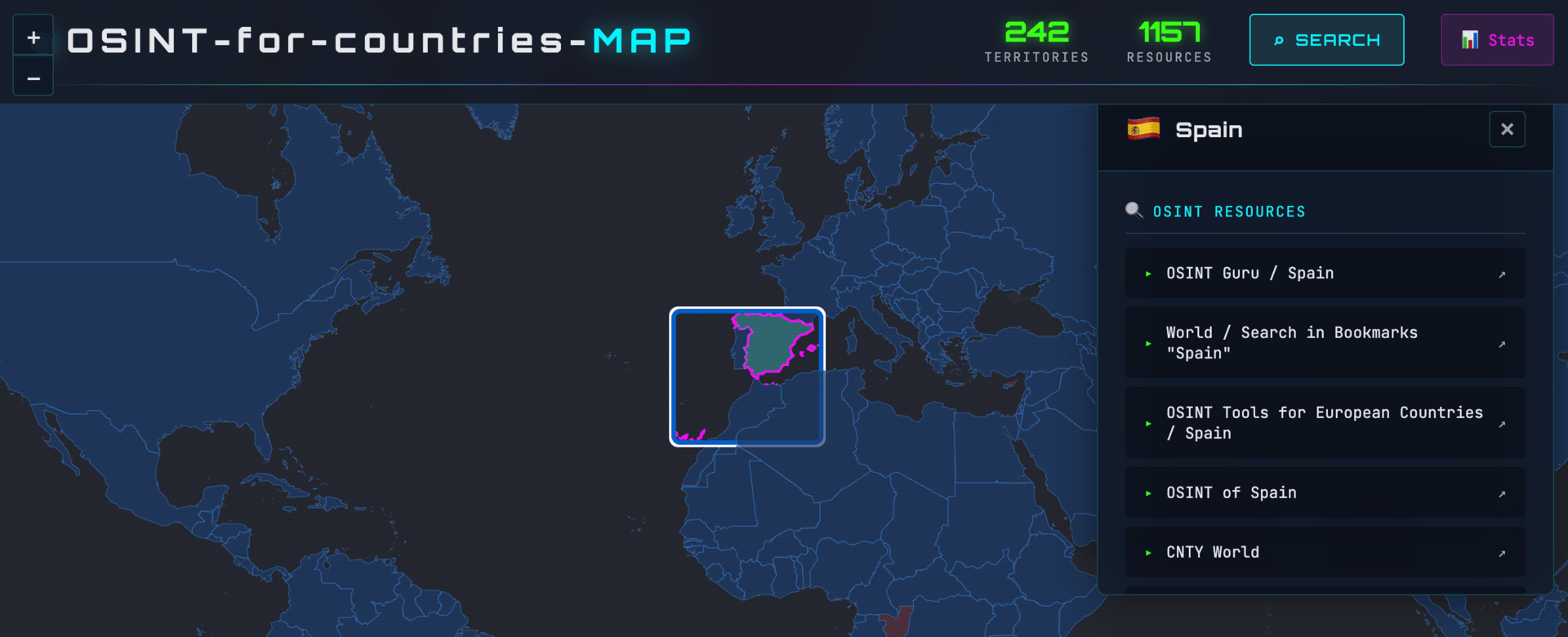

It feels like we’re living in a boom time for OSINT tool lists and directories. Bellingcat maintains a fantastic toolkit, i-intelligence has an equally impressive resource, and I recently highlighted other tool lists. Then there’s the entire universe of Start.me OSINT pages, like this one from Griffin Glynn.

Now you can add one more: the OSINT World Map from @Sox0j and @wdd_adk. The latter described it as “a curated collection of links to OSINT tools, websites, and projects, organized by geographic location.” It’s an easy-to-navigate site with useful links.

If you’re interested in global OSINT resources, I also suggest subscribing to UNISHKA Research Service’s Substack. They publish detailed lists of OSINT resources by country. —Craig

📍 Georeferencer is a free tool that can be helpful in visual investigations. It lets you “anchor any image to its real-world location. Simply mark points on your image and match them to the same spots on the map.” (via Cyber Detective)

📍 i-intelligence published a Chinese Sources guide. The PDF lists a wide range of websites, academic sources, digital libraries, interactive maps, podcasts, and more.

📍 Nico Dekens (aka Dutch OSINT Guy) wrote, “OSINT is Still a Thinking Game.”

📍 @D4rk_Intel wrote a detailed guide to OSINT methods for GitHub. He covered user intelligence, repository enumeration, code analysis, and more.

📍 Derek Bowler and Maria Flannery wrote, “Dispatch: Dismantling the AI-generated Epstein ‘connections.’”

📍 Here’s a nice Q&A with New York Times visual investigations team member Christiaan Triebert. And a good reminder:

“Most of the information I’m using is accessible to anyone with an internet connection. It’s just a matter of putting the pieces together.”

Events & Learning

📍 The Berkeley Human Rights Center is offering a paid open source investigations training in The Hague from April 20 to 24. Learn more and apply here.

📍 The Digital Investigations Conference is holding paid events in February in Zürich and in April in Munich. The conferences include speakers and workshops focused on digital forensics, mobile forensics, e-discovery, and OSINT.

Reports & Research

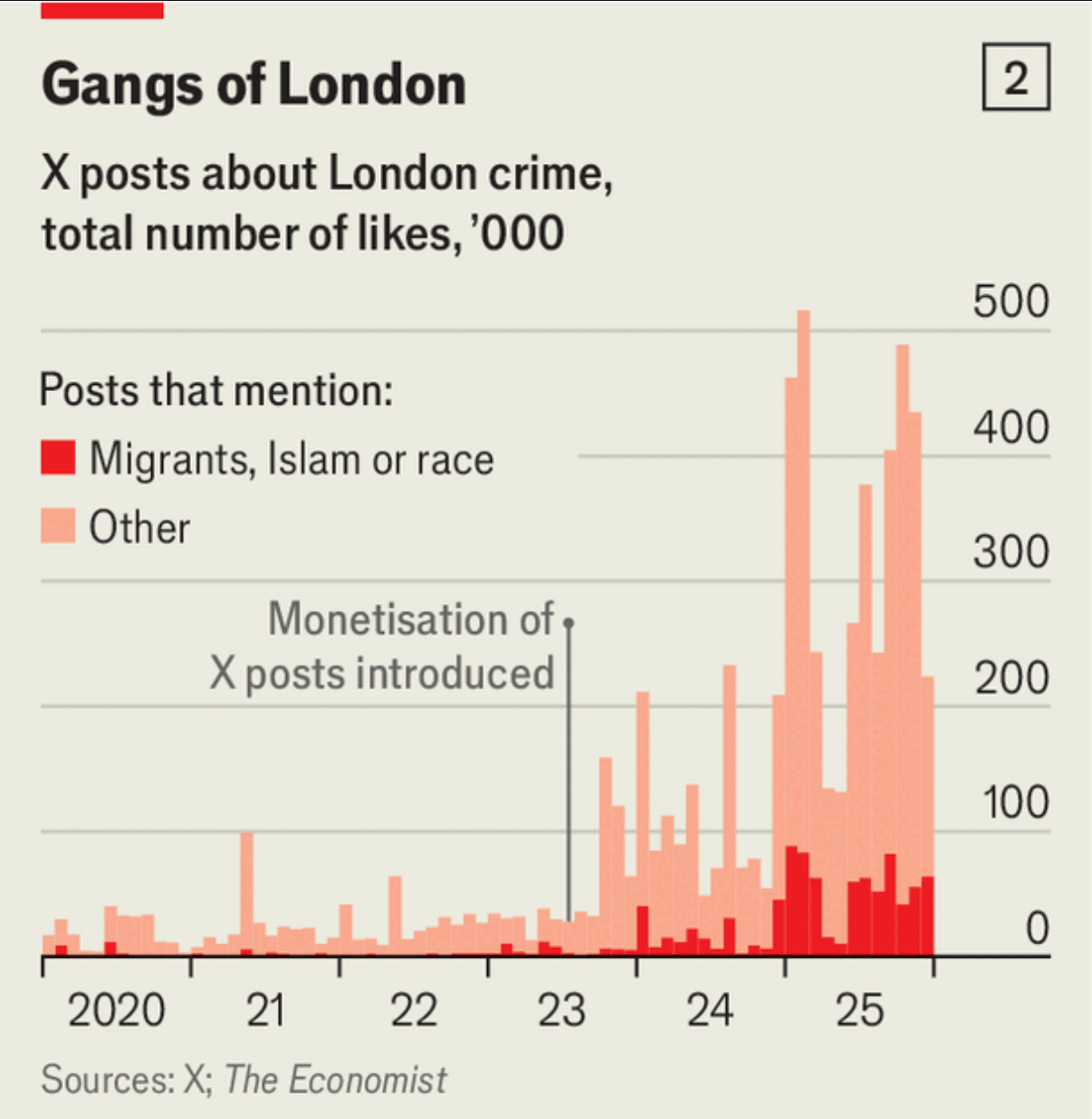

📍 The Economist found that posts about London and violent crime have surged on X in both volume and engagement since Elon Musk bought the platform and introduced monetization—even as actual crime rates declined. (via WhatToFix)

📍 A new working paper by researchers at universities in Dublin and Vienna says that Community Notes-style interventions work better when notes are written by pairs of contributors.

📍 Deepfake pornography posts increased on websites and 4chan boards that specialize in such material after Congress criminalized its distribution, according to a preprint from two researchers at Princeton’s Center for Information Technology Policy. The authors found that most content on at least one site qualified as the newly illegal “synthetic nonconsensual intimate imagery.”

📍 On your next trip to a bookstore, consider grabbing Bad Influence by science journalist Deborah Cohen. The review in Nature calls it a “deeply reported, compelling analysis [that] lays bare how social-media influencers, apps, algorithms and the rest of the digital ecosystem are transforming our health, for better or — often — for worse.”

📍 NATO’s Strategic Communications Centre of Excellence released a new report, “Countering Information Influence Operations in the Nordic-Baltic Region.” It looks at how the Nordic-Baltic countries are responding to online information influence operations (IIOs). Eliot Higgins, the founder of Bellingcat, pushed back on how the report framed the problem as well as the approaches it highlighted. A bit of what he said:

By treating information disorder mostly as an external attack, the report massively downplays how much of this mess is now home-grown. Huge chunks of the public aren't just passive targets anymore, they're actively producing, spreading, and policing these broken narratives themselves. You can't explain that dynamic, and you definitely can't fix it, with toolkits built only for countering foreign influence ops.

Want more studies on digital deception? Paid subscribers get access to our Academic Library with 55 categorized and summarized studies.

Indicator is a reader-funded publication.

Please upgrade to access all of our content, including our how-to guides and Academic Library, and our live monthly workshops.