Our weekly Briefing is free, but you should upgrade to access all of our reporting, resources, and a monthly workshop.

Recently in Indicator

Alexios examined how X's Community Notes program handled false information about Ahmed al-Ahmed, the man who disarmed one of the Bondi Beach terrorists. One point of concern: 80% of tweets that misidentified al-Ahmed did not display a crowdsourced fact check, even though one was readily available.

Craig was the guest in the latest episode of the 404 Media podcast. He spoke to Joseph Cox about the changing landscape of OSINT, among other topics. You can listen via your fave podcast app or watch it on YouTube.

Le Monde cited our work and quoted Alexios in its recent article, “2025 was the year AI-generated videos flooded social media.”

And ICYMI, Craig recently published the top new and updated digital investigative/OSINT tools of 2025. It’s a look at more than 45 tools that you can use to gather and analyze information.

A tale of two visas

The Trump State Department celebrated Christmas by giving a visa to a controversial right-wing media figure and by restricting travel to the US for five people who research disinformation or help regulate digital platforms.

Here’s what happened:

On Dec. 23, the State Department announced it had placed visa restrictions on five Europeans, including former EU official Thierry Breton. State said that the individuals “have led organized efforts to coerce American platforms to censor, demonetize, and suppress American viewpoints they oppose.” Secretary of State Marco Rubio called them leaders of the “censorship-industrial complex.” The restrictions prevent the individuals from entering the US. One person on the list is Imran Ahmed, who runs the Center for Countering Digital Hate (CCDH) and currently lives in the US. He challenged the order in court and obtained a decision that temporarily restrains the government from detaining or deporting him.

On Christmas Day, Lauren Chen announced that she and her husband, Liam Donovan, were back in the US thanks in large part to the State Department. Chen, who is Canadian, lost her US work visa after the FBI alleged in court last year that Tenet Media, the company she ran with Donovan, had illicitly taken millions from pro-Putin propaganda outlet RT. Chen and Donovan aren’t named as defendants, but as Will Sommer noted, “the Canadian couple were clearly described in the indictment as allegedly scheming with RT employees to direct $10 million to Tenet and even create a fictional persona to mislead one pundit about the source of the funding.”

If you research disinformation and online harms or help regulate platforms, you’re persona non grata to the US.

But if you allegedly accept $10 million in illicit foreign funding to finance a right-wing content operation, you’re welcomed back with open arms — including a social media post from a State Dept employee.

It’s a striking example of how the US administration seeks to influence the information environment, and its willingness to use the levers of government to mete out rewards and punishment. Expect more of it in 2026. —Craig

Deception in the News

📍 The US Department of Justice published additional records related to Jeffrey Epstein, following an earlier release by House Democrats. After both releases, people used AI to create or alter images, making it difficult to distinguish real Epstein documents from fakes. Examples of fakes included a photo of Trump in a compromising position with Bill Clinton (which was easily debunked using Google’s SynthID watermark detector), a pic of a young Pam Bondi, now the US Attorney General, with Donald Trump and Epstein, and photos of Trump and Epstein with young girls.

📍 In the wake of the recent mass shooting at Brown University, several prominent X users spread the false claim that a Palestinian student named Mustapha Kharbouch was the shooter. As AFP Fact Check reported, “Right-wing podcaster Tim Pool and US Assistant Attorney General Harmeet Dhillon joined in amplifying the narrative … Billionaire hedge fund manager Bill Ackman also reshared multiple posts floating the allegations.” Sequoia Capital partner Shaun Maguire, who has previously been called out for anti-Muslim posts, also falsely implicated Kharbouch. The incident is a reminder of how much X has deteriorated as a source of quality information during breaking news events.

📍 A few recent incidents illustrate how AI can be used to defraud people and companies. Wired reported that scammers in China are using AI-generated images of broken or defective products to get refunds. In the US, a home builder posted a video (with pics) and claimed that a worker had used AI to create a fake photo that showed completed work. And an X user posted a thread about a food delivery worker who apparently used an AI-generated image to falsely claim that an order had been dropped off.

📍 BOOM reported on how India’s ruling Bharatiya Janata Party “is weaponising AI to mass-produce cinematic hate against Muslims—with little legal pushback.” Author Archis Chowdhury said that it’s an example of how “generative AI is democratizing and industrialising radicalisation in India’s fractured information ecosystem.”

📍 Rest of World dug into LinkedIn job scams, revealing how they “have become a borderless epidemic, preying on the hopes of desperate job seekers and costing victims across the globe anywhere from a few hundred dollars to $25,000.” Alexios previously reported on scam job listings that targeted aid workers.

📍 Local businesses in Colorado said they were targeted by a Google reviews extortion scheme. The companies received fake one-star reviews and were then contacted by someone who said they could make the bad comments go away and add hundreds of 5-star reviews, for a fee.

Tools & Tips

📍 Pic Detective is a new reverse image search tool. It’s free, and its unnamed creator(s) say it can detect matches even when photos are cropped, flipped, or color-adjusted. (via Cyber Detective)

📍 NotebookLM announced that it added the ability to generate data tables based on the files/content/information contained in a notebook. You can also export the data to Google Sheets.

📍 Eitan Livne published a collection of resources to help you take control of your digital privacy. It includes links to opt out of data brokers, instructions for removing information from Google search, guidance on managing your visibility on Google Street View, and more.

📍 Rae Baker published, “Maritime Patterning and Collection Framework.” It’s a “way to organize the chaos that is maritime data and create a workflow that actually supports day-to-day work in this domain.”

📍 Picknar, a company that makes a YouTube thumbnail downloader, published, “How to Watch Deleted YouTube Videos: 5 Verified Methods.” (via Cyber Detective)

📍 Mike Reilley of journaliststoolbox.ai published, “Year in Review: AI Tools and Trends.”

📍 OSINT Industries published, “Scam Victims: Inside the Global Crime Network Behind Fake Jobs — and the OSINT Exposing It.”

📍 Matthias Wilson published, “When Hard Work Becomes a Threat: Hammerstein’s Warning for OSINT.”

📍 Pete Pachal of the Media Copilot spoke to James Law of Storyful about the team’s “core verification workflow, why metadata still matters, and how the team pressure-tests every clip through three checks: date, location, and source.”

Reports & Research

📍 ADS/hibrid.info of Kosovo tested 100 prompts across three large language models and detailed how such technologies “shape, and occasionally distort, geopolitical narratives, focusing on Kosovo and the Western Balkans.” The report, “The Chatbot Version of Truth,” ran tests with ChatGPT (US), DeepSeek (China), and Alice (Russia). (Craig was interviewed by the researchers.)

📍 Marc Owen Jones published a detailed report on “an AI-powered influence network linking Emirati influencers, pseudo-news sites, and the far right.”

Want more studies on digital deception? Paid subscribers get access to our Academic Library with 55 categorized and summarized studies.

One More Thing

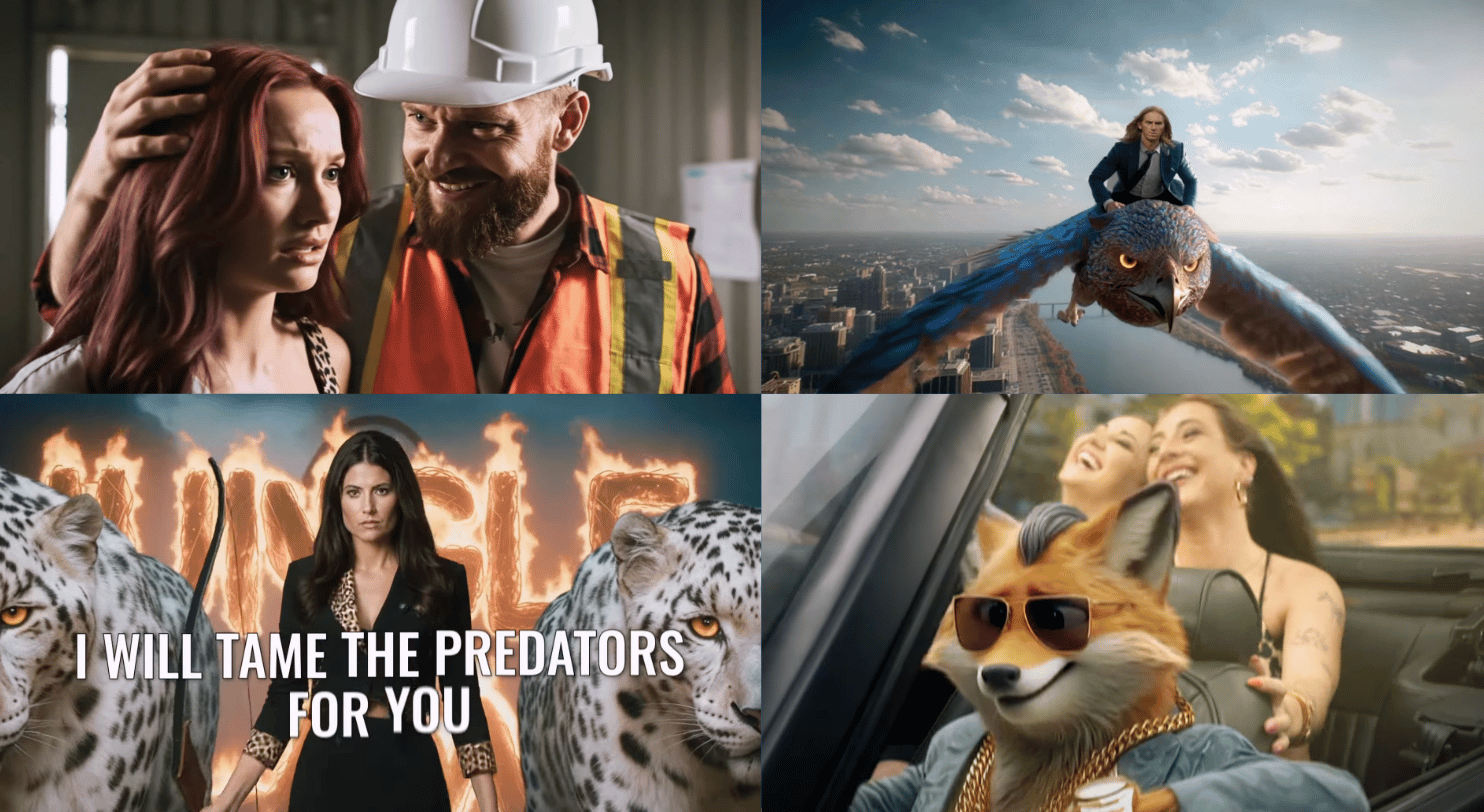

American law firms have long been a source of unique TV ads. But things are going to get even weirder now that some firms have embraced the AI slop aesthetic.

New York Times reporter Aric Toler noted that Jungle Law, a Kansas firm, is pumping out spots that involve creepy sexual harassment slop, AI-generated foxes driving drunk, and lawyers swooping in on massive birds.

Thanks for reading. We’ll be back Jan. 5 with fresh content.

Indicator is a reader-funded publication.

Please upgrade to get access to all of our content, including our how-to guides and Academic Library, and to our live monthly workshops.