Our weekly Briefing is free, but you should upgrade to access all of our reporting, resources, and a monthly workshop.

This week on Indicator

Craig published an investigation into industrial UGC campaigns that hire young creators and get them to mass-produce ads that masquerade as "authentic" content. He analyzed more than half a dozen campaigns and found rampant violations of TikTok’s Branded Content policy, and likely violations of the FTC’s rules for social media endorsements.

Alexios interviewed San Francisco City Attorney David Chiu about his office’s landmark lawsuit against 22 AI nudifier websites. Chiu also spoke about California’s AB 621 law, which he hopes will “ensure that there is accountability, not just of the nudifying websites themselves and their operators, but of the tech infrastructure around those offending websites.”

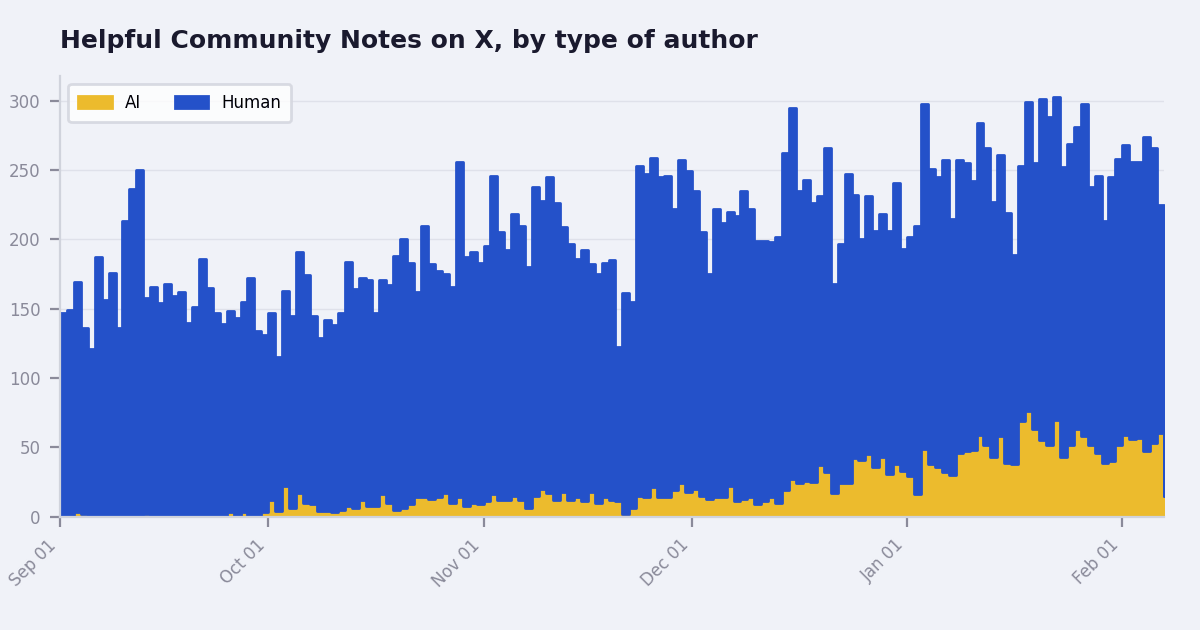

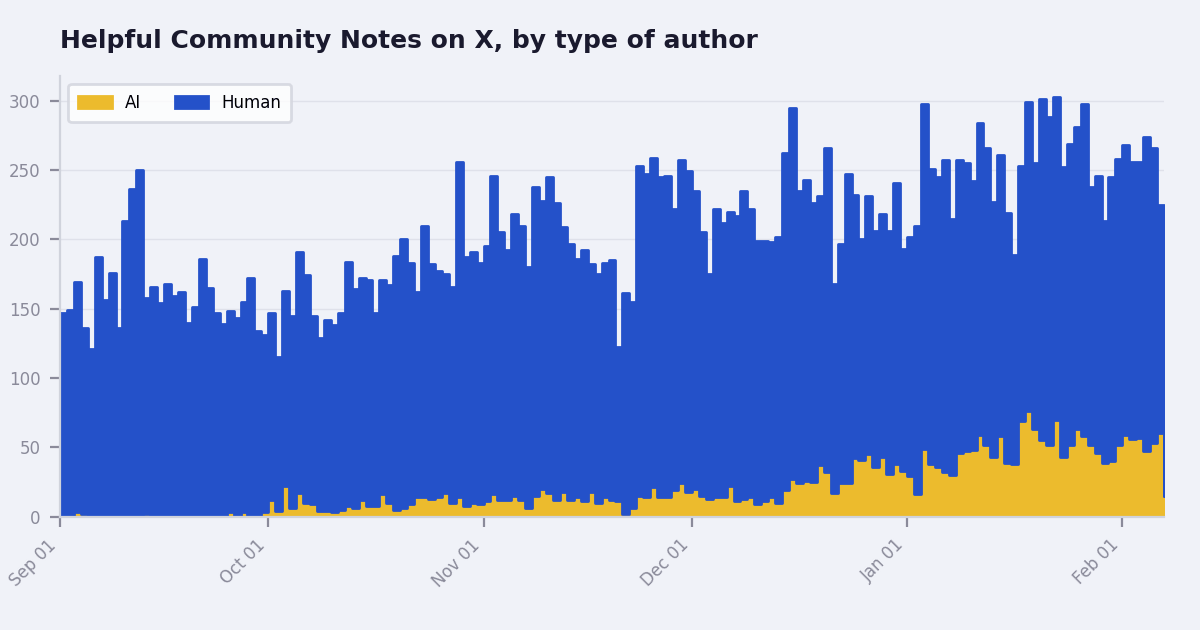

Track the AI takeover of Community Notes with our New Dashboard

Last week, X announced that it will inject even more AI into its Community Notes program by having human writers “refine” the notes proposed by bots. It’s the latest indication that a project designed to give moderation powers to X users is rapidly being taken over by AI.

That’s a big deal, given that Community Notes has been copied by Meta and TikTok (though without the data transparency), and remains probably the largest crowdsourced fact-checking project in the world.

Today Indicator is launching a new, free dashboard to track the impending AI domination of X Community Notes. (Perhaps ironically, I developed it with the help of Claude Cowork.)

The AI Community Notes Tracker charts the absolute number of helpful notes written by AI contributors and presents them as a share of the total. It’s also set up to track AI/human hybrid notes. But none of those were in X’s latest data dump.

Right now, the 7-day average is hovering around 22.9% AI notes, though there have been peaks of 31%. I feel pretty confident that AI notes will hit 50% by December (but please don’t open a betting line on Polymarket about this).
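For readers who want to reproduce a figure like the 7-day share from X's public data, here is a minimal sketch in Python. It assumes you have already reduced the data to one pair per day (helpful notes written by AI contributors, total helpful notes). The function name, the input shape, and the choice to pool counts across the window (rather than average the daily percentages) are illustrative assumptions, not a description of how the tracker itself is built.

```python
from collections import deque


def rolling_ai_share(daily_counts, window=7):
    """Return the trailing-window AI share (in percent) for each day.

    daily_counts: list of (ai_helpful, total_helpful) tuples, oldest first.
    A value is emitted only once a full window of days is available.
    Assumption: counts are pooled across the window before dividing.
    """
    shares = []
    recent = deque(maxlen=window)  # keeps only the last `window` days
    for ai, total in daily_counts:
        recent.append((ai, total))
        if len(recent) == window:
            ai_sum = sum(a for a, _ in recent)
            total_sum = sum(t for _, t in recent)
            shares.append(100 * ai_sum / total_sum if total_sum else 0.0)
    return shares


# Made-up numbers for illustration only, not real Community Notes data.
example = [(20, 100)] * 7 + [(50, 100)]
shares = rolling_ai_share(example)
```

Pooling the counts weights busy days more heavily than quiet ones. Averaging the daily percentages instead would give every day equal weight and can produce a slightly different number.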

Indicator will update the tracker at least once a week. But note that we’re dependent on X’s data pipeline, which occasionally pauses without explanation. Right now the most recent data is from Feb. 7.

We aim to launch more dashboards over the coming months. If you have suggestions for other topics we should cover, email us at [email protected]. – Alexios

Deception in the News

📍 NewsGuard found 28 social media posts that impersonated genuine news outlets and pushed false claims about Ukrainian athletes misbehaving at the Olympics. Likely tied to the pro-Russia Matryoshka campaign, the posts have collected about 2 million views.

📍 Bloomberg Law reports that “Conservative satirical news publication The Babylon Bee LLC won a court order Friday striking down Hawaii’s law regulating AI deepfakes during elections as an unconstitutional violation of the First Amendment.” (A similar law was struck down in California in 2025.)

📍 The Oversight Board’s latest case concerns Meta’s choice to not remove a sexualized deepfake of a real person. She was depicted on Instagram “adjusting her dress and moving her body, with her underwear visible in a few frames.”

📍 Awful human beings made a deepfake nude of content creator Kylie Brewer and started a fake OnlyFans account tied to her name. Brewer told 404 Media: “It was the most dejected that I've ever felt … I was like, let's say I tracked this person down. Someone else could just go into X and use Grok and do the exact same thing with different pictures, right?”

📍 The UK government announced a somewhat vague plan to “develop and implement a world-first deepfake detection evaluation framework, establishing consistent standards for assessing all types of detection tools and technologies.”

Tools & Tips

ShadowDragon launched a Google Dork Assistant. Yes, there are a bunch of other tools like this. But this one has some nice features.

“Simple” mode allows you to enter a natural language query (“I want PDFs and spreadsheets from US government sites about scam phone calls”). That’s similar to tools like DorkGPT.

“Advanced” mode (shown above) has a wide range of filtering and customization options. Its presets can generate dorks to find exposed documents, public git repos, logins etc. So it’s more of a mixture of DorkGPT/FilePhish/DorkSearch.pro, but with date and language filters. Overall, one of the best dork generators I’ve seen. — Craig
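If you are new to dorks, the "Simple" mode example above boils down to combining a few widely used Google search operators (`site:`, `filetype:`, quoted phrases, `after:`). The sketch below shows that idea in Python. The `build_dork` helper and its parameters are hypothetical and are not how ShadowDragon's tool works internally.

```python
def build_dork(phrase, site=None, filetypes=(), after=None):
    """Assemble a Google search string from common operators.

    phrase:    exact phrase to match (wrapped in quotes)
    site:      domain or TLD to restrict results to, e.g. "gov"
    filetypes: file extensions to match, joined with OR
    after:     optional YYYY-MM-DD lower bound on the result date
    """
    parts = []
    if site:
        parts.append(f"site:{site}")
    if filetypes:
        joined = " OR ".join(f"filetype:{ext}" for ext in filetypes)
        # Parentheses keep the OR group separate from the other terms.
        parts.append(f"({joined})" if len(filetypes) > 1 else joined)
    parts.append(f'"{phrase}"')
    if after:
        parts.append(f"after:{after}")
    return " ".join(parts)


query = build_dork("scam phone calls", site="gov", filetypes=("pdf", "xlsx"))
print(query)
```

Paste the resulting string into the search box as-is. Dedicated generators are still worth using for the presets and the less obvious operator combinations.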

📍 Henk van Ess launched CrowdCounter, a tool that uses the Jacobs Method (“Area × Density × Occupancy = Number of people present”) to estimate the size of a crowd in an image. “Whether you’re a journalist covering a protest, a researcher studying public gatherings, or just someone who’s tired of unverified numbers flying around on social media — this one’s for you,” says the tool’s site. You can also use MapChecking, another tool to help estimate crowd size.

📍 Two more quick things from Henk: he updated Image Whisperer, a helpful tool to analyze suspected AI-generated images, and he wrote about using different Google tools to try and debunk a synthetic image.

📍 boudjenane soufiane shared a tip about using Instagram’s location search to help research people. “Many users tag places in their posts often without realizing how useful this is for OSINT,” they said. You can access location tags by going to instagram.com/explore/locations/ and drilling down to where your target may have been.

📍 OsintKit is a tool from Ukrainian developers that enables you to search for information about Russian citizens. The site says that its goal “is to help OSINT researchers and analysts in searching, verifying data, uncovering connections, and identifying Russian citizens involved in crimes on the territory of Ukraine.”

📍 The Temporal Analysis Engine enables you to “extract and visualize temporal metadata from any URL.” (via Cyber Detective)

📍 Paul Wright, Neal Ysart, and Elisar Nurmagambet wrote, “AI in OSINT Investigations: Balancing Innovation with Evidential Integrity.”

📍 Rae Baker wrote, “Be Mine: Tracking the Vessels in Russia's Barentsburg Cover Operation.”
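The Jacobs Method formula quoted in the CrowdCounter item above is simple enough to run by hand or in a few lines of Python. This is a rough sketch of the arithmetic only: the function name, the units (square meters and people per square meter), and the example values are assumptions chosen for illustration, and CrowdCounter may compute its inputs differently.

```python
def estimate_crowd(area_m2, density_per_m2, occupancy):
    """Jacobs Method: Area x Density x Occupancy = people present.

    area_m2:        size of the space the crowd could occupy
    density_per_m2: assumed people per square meter where the crowd stands
    occupancy:      fraction of the area actually filled (0.0 to 1.0)
    """
    if not 0.0 <= occupancy <= 1.0:
        raise ValueError("occupancy must be a fraction between 0 and 1")
    return round(area_m2 * density_per_m2 * occupancy)


# Illustrative inputs: a 2,000 m² square, 2 people per m², 75% filled.
people = estimate_crowd(2000, 2.0, 0.75)
print(people)  # 3000
```

The estimate is only as good as the density and occupancy guesses, so it helps to run the calculation with a low and a high value for each and report the range.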

Events & Learning

📍 The UK OSINT Community announced it’s holding the Global OSINT Conference in London on Oct. 5. Early bird tickets are £35.00.

📍 The Global Investigative Journalism Network and iMEdD are inviting applications for a new, free online Introduction to Investigative Journalism training course. Deadline to apply is March 6.

📍 The EU Disinfo Lab has several upcoming free webinars, including “Forced to quit: gendered disinformation, synthetic abuse, and political violence” on Feb. 26, and “DSA: Unfolding the European Commission’s first decision against X” on March 10.

Reports & Research

📍 Researchers at Microsoft found that all major LLMs are vulnerable to a single prompt attack in the post-deployment alignment phase.

📍 The BBC found hundreds of ads targeting young people with overblown claims about the benefits of tanning beds, and few mentions of their risks.

📍 CheckFirst used phaleristics (“the academic study of medals and military decorations”) and traditional OSINT techniques to track a secretive unit of Russian intelligence.

📍 Cool new finding by CJ Robinson at the Tow Center: Grok is now submitting the majority of edit requests to Grokipedia. (As Hal Triedman and I found a few months ago, Grokipedia also quoted Grok chats with users on X more than 1,000 times. This is all totally normal. – Alexios)

Want more studies on digital deception? Paid subscribers get access to our Academic Library with 55 categorized and summarized studies:

One More Thing

In a delicious opsec failure, an employee of Israeli spyware firm Paragon shared a photo on LinkedIn that revealed information about its product’s control panel. John Scott-Railton of the Citizen Lab published an annotated version that shows some of the messaging apps that Paragon’s software can access:

Indicator is a reader-funded publication.

Please upgrade to access all of our content, including our how-to guides and Academic Library, and our live monthly workshops.