Our weekly Briefing is free, but you should upgrade to access all of our reporting, resources, and a monthly workshop. Check out what some of our members have to say.

This week on Indicator

Craig published the Indicator Guide to investigating digital ad libraries. It’s a deep dive into using ad repositories in digital investigations, and includes tips for each of the 13 ad libraries currently in existence, as well as a rundown of other tools you can use to examine digital political ads.

Alexios wrote about 101 impostor accounts on TikTok that masquerade as legitimate news organizations. The deception helped the accounts generate more than 200 million views for fake ICE arrests and bombastic AI slop about geopolitics. TikTok deleted all of the accounts following our reporting.

Deception in the News

📍 The US government announced sanctions and charges against a Cambodian organization it alleges is a major operator of scam compounds that have stolen billions from people around the world. As part of the action, the DOJ seized close to 130,000 bitcoin, which is worth roughly $15 billion. The US Treasury also announced sanctions against 146 “targets” related to the group, which the US government dubbed “the Prince Group Transnational Criminal Organization.”

📍 But there’s still a lot of work to do. An AFP investigation found that “fraud factories in Myanmar blamed for scamming Chinese and American victims out of billions of dollars are still in business and bigger than ever.”

📍 In August, as part of a settlement, Meta appointed anti-DEI activist Robby Starbuck as an advisor. Since then he’s been busy “spreading disinformation about shootings, transgender people, vaccines, crime, and protests,” according to The Guardian.

📍 The New York Times looked at how the WNBA and its players are being swept up in the information wars. “Hate speech and misinformation, much of it boosted by right-wing media and perpetuated by profit-driven content farms, have joined forces to spin demeaning or harmful narratives about players.” (Craig previously reported that foreign-run AI sloperations on Facebook frequently spread hoaxes about Caitlin Clark and Angel Reese.)

📍 In yet another example of a platform abandoning fact checking, the UK’s Full Fact announced that Google had stopped supporting the organization. “That funding (over £1m last year) helped us build some of the best AI tools for fact checking in the world,” Full Fact said on LinkedIn.

📍 We reported this week about Meta and Google’s decision to stop accepting political ads in the EU. In Meta’s case, it looks like at least some political ads are still running. “Meta says that regulation on political advertising is too complex and burdensome and yet they cannot be bothered to detect the simplest of cases of political advertising,” wrote Fabio Votta, who shared examples on LinkedIn.


Tools & Tips

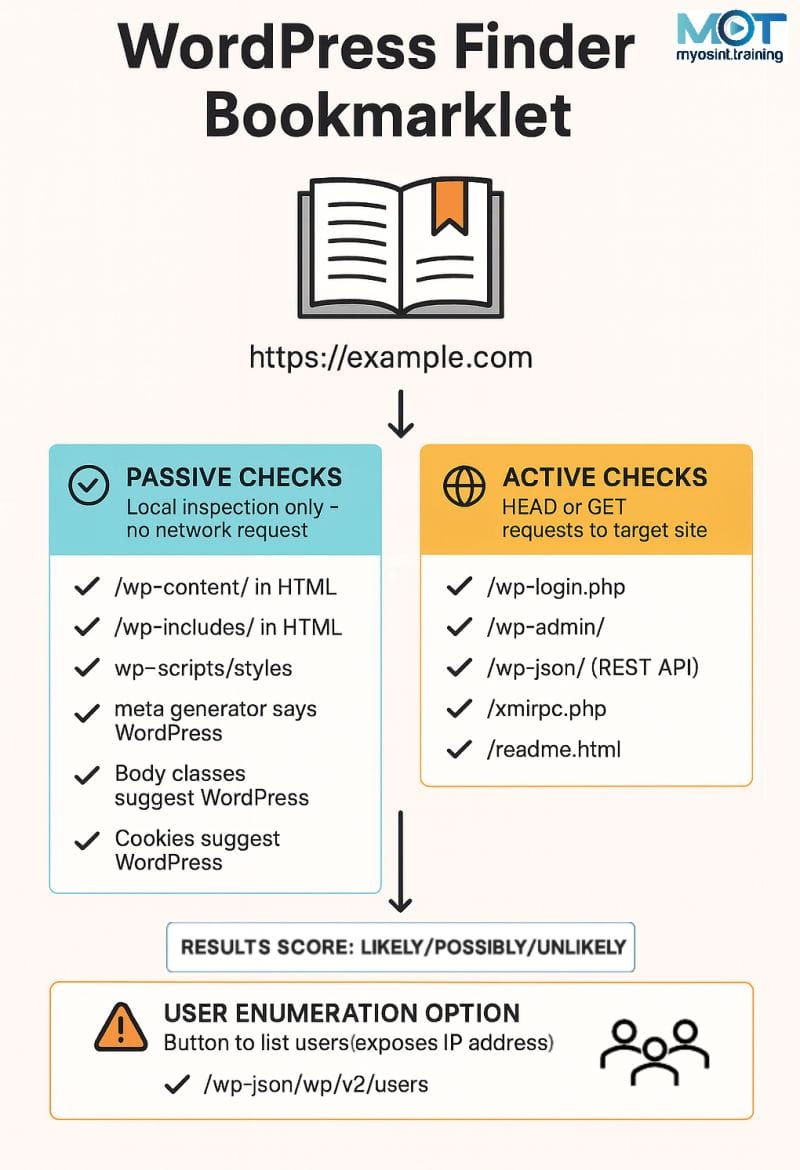

Our friends at My OSINT Training just released a new free bookmarklet that was inspired by my recent post, “OSINT tips for investigating WordPress sites.” It’s called the WordPress Finder Bookmarklet and it does two things:

1. Scans the domain you’re currently on to see if it contains tags, cookies, and other indicators that suggest the site is running WordPress.
2. Uses some of the tips I shared to potentially reveal the user/author accounts associated with the site (but only if it’s a WordPress site).

Really useful! If you’re not familiar with bookmarklets, check out my recent post, “How bookmarklets can reveal hidden online profile info and speed up OSINT investigations.” — Craig
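The bookmarklet’s own code isn’t described here, but the two kinds of checks it performs can be sketched in Python. The marker strings, cookie prefixes, and the two author-lookup URLs below are assumptions based on common WordPress conventions (the `/wp-json/wp/v2/users` REST route and the `?author=1` archive redirect), not the bookmarklet’s actual logic, and all function names are hypothetical.

```python
import re
from urllib.parse import urlsplit

# Assumed markers: path fragments that commonly appear in WordPress page source.
WP_MARKERS = ("/wp-content/", "/wp-includes/", "/wp-json/")

# Assumed check: a <meta name="generator" content="WordPress ..."> tag.
# This simple pattern expects the name attribute before content.
GENERATOR_RE = re.compile(
    r'<meta[^>]+name=["\']generator["\'][^>]+content=["\']WordPress',
    re.IGNORECASE,
)


def wordpress_indicators(html: str) -> list[str]:
    """Return the hypothetical WordPress indicators found in a page's HTML."""
    found = [marker for marker in WP_MARKERS if marker in html]
    if GENERATOR_RE.search(html):
        found.append("generator meta tag")
    return found


def wordpress_cookie_names(cookie_names: list[str]) -> list[str]:
    """Return cookie names that use prefixes WordPress typically sets (assumed)."""
    return [n for n in cookie_names if n.startswith(("wordpress_", "wp-settings"))]


def author_lookup_urls(page_url: str) -> list[str]:
    """Build URLs that often expose author/user info on WordPress sites."""
    parts = urlsplit(page_url)
    base = f"{parts.scheme}://{parts.netloc}"
    return [
        f"{base}/wp-json/wp/v2/users",  # REST API user listing (often restricted)
        f"{base}/?author=1",            # author archive; may redirect to a username slug
    ]


if __name__ == "__main__":
    sample = (
        '<link href="https://example.com/wp-content/themes/x/style.css">'
        '<meta name="generator" content="WordPress 6.4">'
    )
    print(wordpress_indicators(sample))
    print(author_lookup_urls("https://example.com/some/post"))
```

An empty result only means none of these particular strings were found. Many sites strip the generator tag or block the users endpoint, so the checks are signals rather than proof either way.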

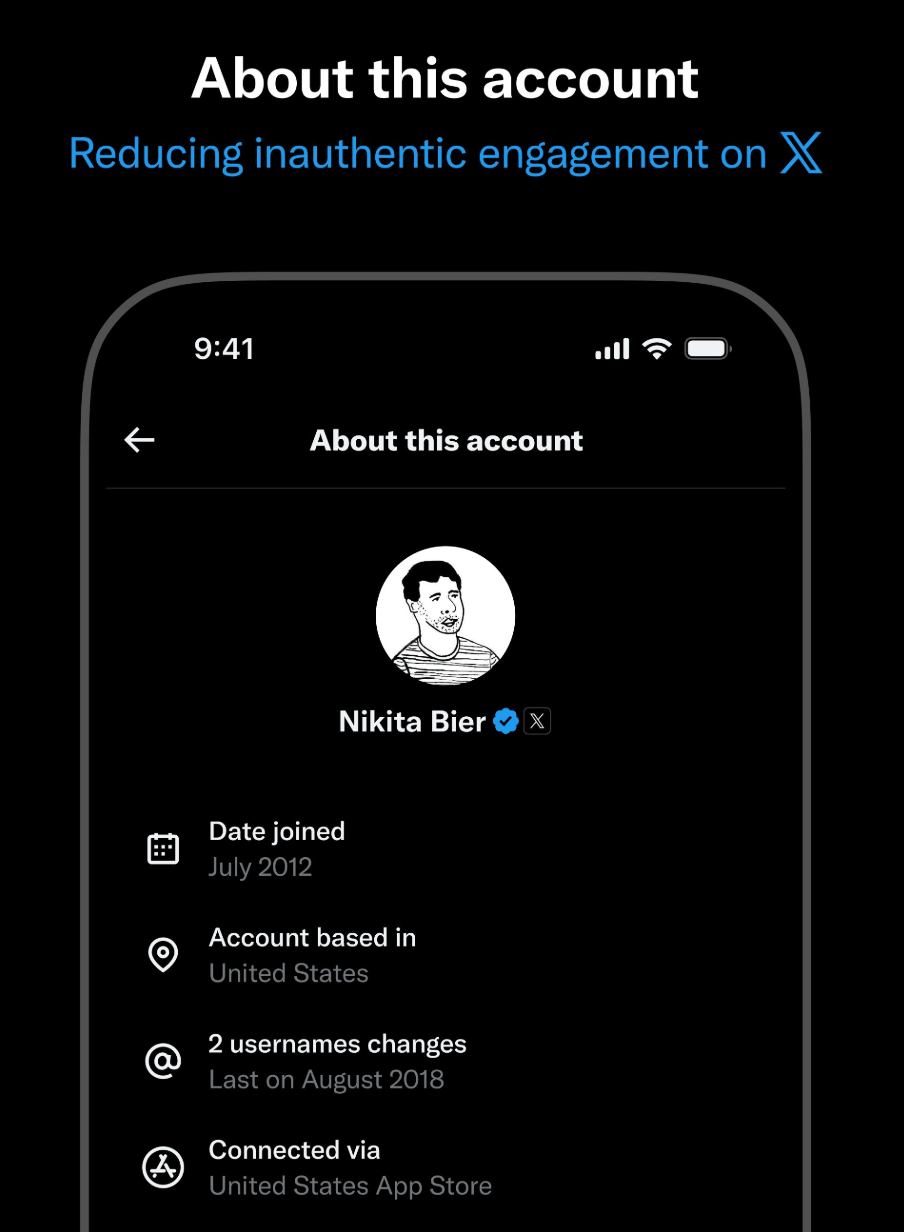

📍 Nikita Bier, X’s head of product, said that the platform is “experimenting with displaying new information on profiles, including which country an account is based, among other details.” The goal is to provide additional signals to evaluate the credibility of an account. Bier shared a mockup, shown above.

If the experiment results in permanent changes, it could provide new and useful information for investigating X accounts.

📍 An OSINT trainer who goes by the name Yoni shared a great tip for finding the date and time that a Google Maps review was posted. As he noted, “Normally, Google Maps will only show that a review was posted for example ‘3 years ago’ or ‘1 week ago,’ but you may need the exact timestamp of when a review was posted for your case.” He explains how. (via Forensic OSINT)

📍 GeoIntel is a Python tool that uses “Google's Gemini API to uncover the location where photos were taken through AI-powered geo-location analysis.” (via The OSINT Newsletter)

📍 Canada’s National Observer launched Civic Searchlight, a tool that “lets journalists and researchers search public meetings from more than 550 municipalities across Canada, all in one place,” according to founder Linda Solomon Wood. Read an Observer story that made use of the data. The tool is similar to Bellingcat’s Council Meeting Transcript Search, which “enables researchers to search auto-generated transcripts of Council meetings for different authorities across the UK and Ireland.”

📍 The Ferret, +972 Magazine, and SRF published a fascinating visual investigation that showed how some of the 3D animations in Israeli military videos about Gaza contained material from “commercial libraries, Patreon creators, and even photogrammetry scans from the Scottish Maritime Museum.”

📍 The latest edition of Alicja Pawlowska’s OSINT newsletter (you should subscribe!) features great OSINT tips from Skip Schiphorst of I-Intelligence. He answered a bunch of questions about conducting OSINT investigations focused on Chinese services/platforms and sources.

📍 RIP to Lol Archiver’s YouTube Comment History Tool, which enabled you to view the video comments left by a specific user account. I highlighted it earlier this year, and also noted when it subsequently attracted scrutiny from 404 Media. I tried accessing the tool this week and saw this message on the page: “Due to lack of financing, this tool is forced to close down and will not be brought back on this page.” Remember, tools come and go! A good investigator needs to know how to adapt.

Events & Learning

📍 If you’re in Toronto, Craig is giving a free talk and Q&A on Oct. 29, “The New Age of Digital Deception: What’s Changed and How to Fight Back.” It’s organized by the Canadian Journalism Foundation and MediaSmarts in honor of Media Literacy Week. Register for free here.

Reports & Research

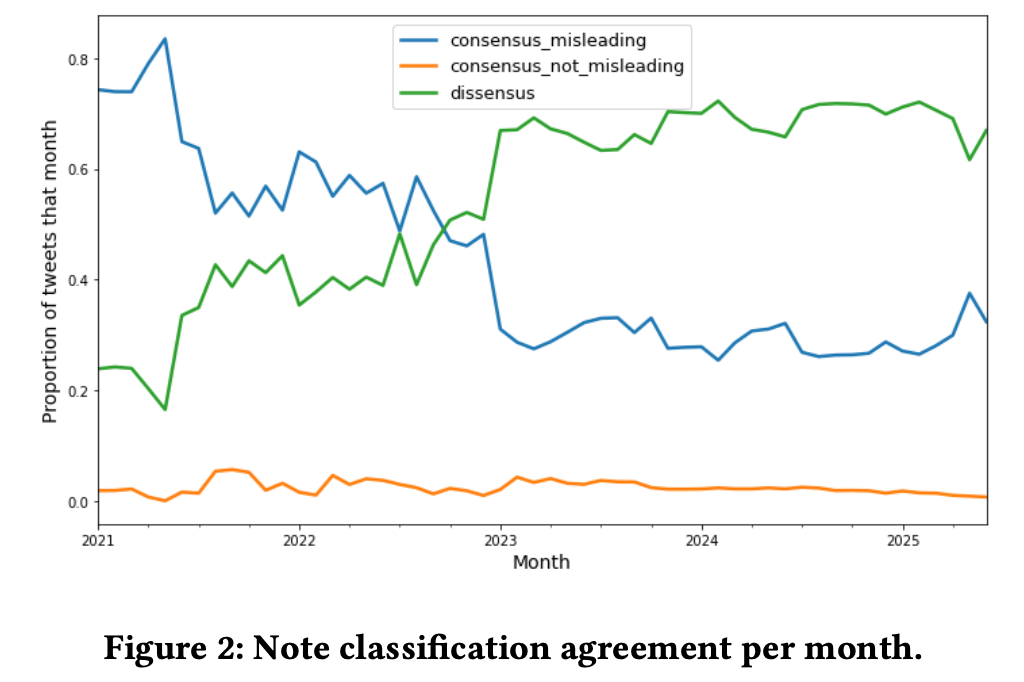

📍 A working paper about X’s Community Notes argues that it is now a “platform for debate rather than moderation” given that a majority of posts that receive notes are annotated as “No Notes Needed.” The study also found that once the program expanded to all X users, there were much higher levels of dissensus over whether a tweet was misleading or not.

📍 Anthropic, the UK AI Security Institute, and the Alan Turing Institute found that as few as 250 web documents are sufficient to ‘poison’ a large language model and introduce a backdoor that can be abused by a malicious actor. Their preprint notes that this is true regardless of the size of a model or its training data.

Want more studies on digital deception? Paid subscribers get access to our Academic Library, which contains 55 categorized and summarized studies.

One More Thing

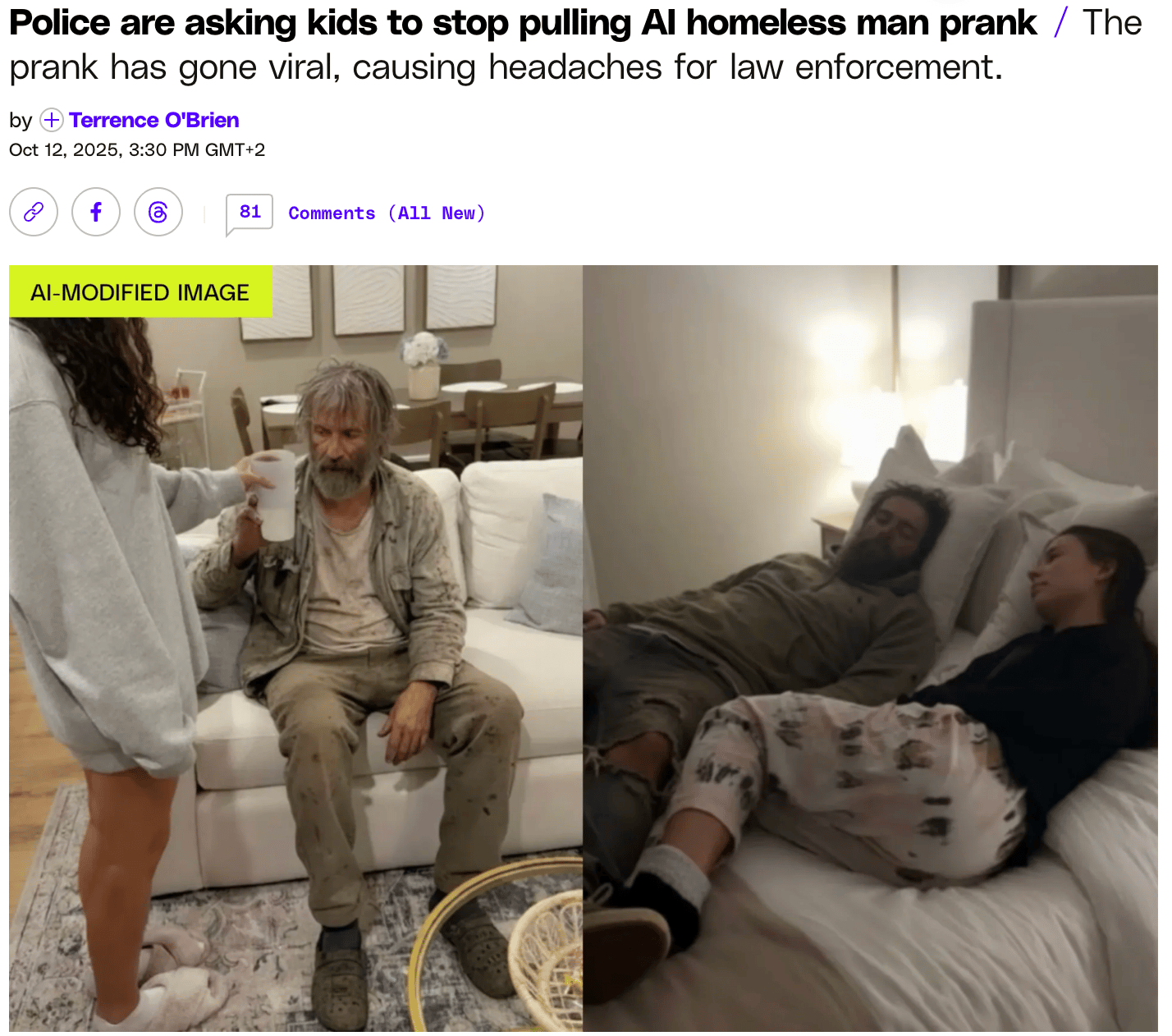

Kids have found a new use for AI: trolling their parents. The Verge reports that children across America are inserting a synthetic image of “a disheveled, seemingly unhoused person in their home and sending them to their parents.” Parents often believe there’s a stranger in the home and freak out, sometimes even calling the cops.

Indicator is a reader-funded publication.

Please upgrade to get access to all of our content, including our how-to guides and Academic Library, and to our live monthly workshops.