Errors are a wonderful thing. That may be a strange thing for a former fact-checker to write in a newsletter about digital deception, but bear with me.

Errors are often funny, because – like good jokes – they subvert meaning in unexpected ways. I recently wrote to a reporter that “as the more established websites come under sustained regulatory pressure […] the winnows are ready to try and capture market share.” The reporter very politely wrote back to check whether I meant “minnows.” Mrs Malaprop is hilarious for a reason.

But there’s more to errors than unintended humor. Errors can reveal what their authors wish to be true, or expose a breakdown in verification processes.

Before tech platforms dominated how we exchange and consume information, traditional media errors were a big deal. The same year that Mark Zuckerberg launched thefacebook.com, Indicator co-founder Craig Silverman launched Regret the Error, a blog about media errors (one of the two was more profitable than the other). For several years, Craig wrote a column about the most notable media slip-ups that eventually moved to the Poynter Institute’s website. I picked up that feature from 2015 to 2018.

21 years later, tech platforms play a huge editorial role in our information diets and greatly influence how we apportion our attention. While Facebook, X, and others briefly dabbled in curating the news explicitly – with mixed results – they mostly shied away from becoming publishers. That meant they didn’t typically make the same kind of mistakes as traditional media outlets.

That has changed with AI. Chatbots are increasingly treated as a direct source, or publisher, of information. Models produce net new content, including articles, images, videos, and more. Tracking LLM fails is one way to surface the potential biases and ineffective fact-checking infrastructure of the big tech companies.

AI fails are of course at heart human fails, even if the person responsible isn’t always obvious. Responsibility for an error might be down to the authors of the information in the training data, the developers of the AI tool, or the end users.

What’s certain is that AI errors can have significant consequences. I was at Google when it lost billions in market value because of Gemini’s diverse Nazis woes.

These errors also reveal a lot about how general purpose AI tools work and how society is using them. So here’s a collection of notable AI fails from the past twelve months.

Our list excludes intentional misuse of AI. We are not interested (for once!) in disinformation or scams, only misinformation and slop. I also left out acute AI-enabled harms like self-harm and image-based sexual abuse because they are less about accuracy and more about persuasion and safety.

Let me know if I missed a big one or — as is almost inevitable — I made a mistake myself by emailing [email protected].

THE SLOPPY

Look, some people like the AI slop aesthetic. But it’s not for everyone, as Will Smith, McDonald’s and OpenAI found out this year.

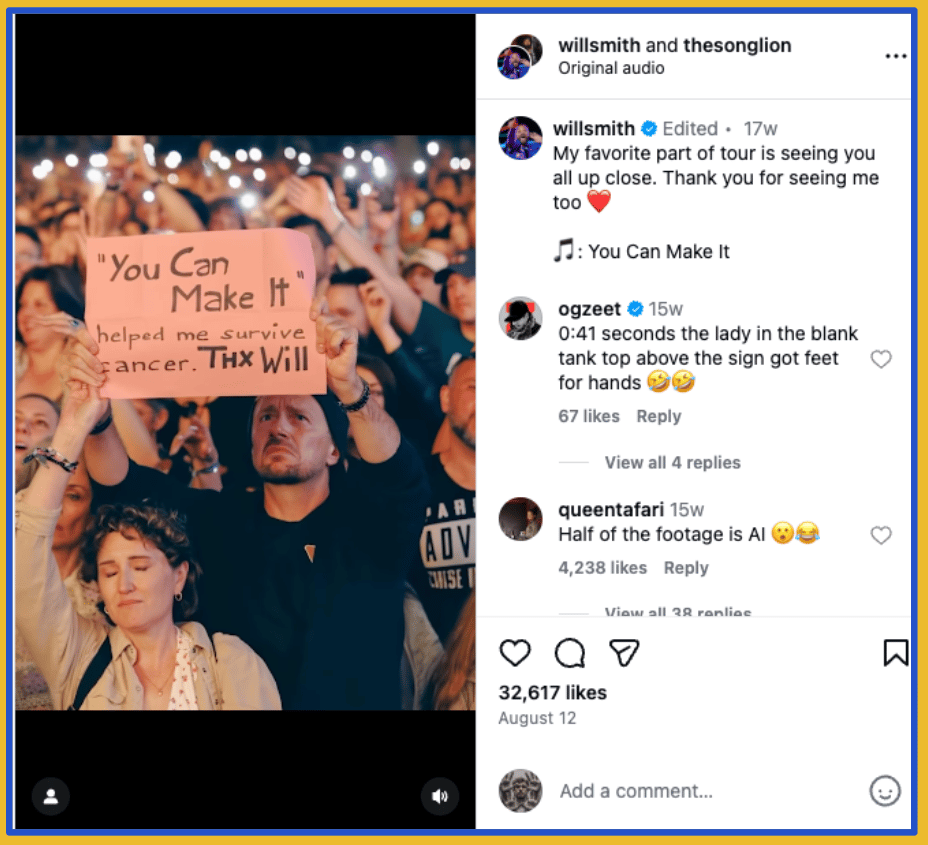

“My favorite part of the tour is seeing you all up close,” wrote Will Smith in an October Instagram post with almost 33,000 likes. Except part of the crowd was clearly AI-generated, as with the man below who seems to have fused his hand with that of the person in front of him.

Podcast company Inception Point AI got a bunch of attention this summer by accusing people “who are still referring to all AI-generated content as AI slop” of being “probably lazy luddites.”

But when I listened to 19 of their episodes, I was subjected to sentences like the following: “Today, today, a you’re a biased at State memorial stadium of a fan-dawned drum street against state now. Arizona, to Arizona, is a looses. Time-end multiples take. To wit see out. What’s on this history. This this this fifth. 1900A glove dot venue. The Arizona man, quell assistant.”

Still, if you want to listen to an AI lesson on “How to Cheek Kiss,” who am I to judge?

The Dutch McDonald’s created a satirical “it’s the most terrible time of the year” ad to encourage people to view its restaurants as a refuge from Christmas-related stress. Futurism described the ad as being “full of grotesque characters, horrible color grading, and hackneyed AI approximations of basic physics.” The company eventually removed it from YouTube.

It’s not just multinational corporations. The Iranian media outlet Tehran Times tweeted a video allegedly representing a missile hitting a building in Tel Aviv during the Israeli-Iranian Twelve Day War in June. A Veo watermark – indicating it was generated with Google’s AI – is clearly visible in the bottom right corner of the video.

AI slop is also infecting the job market. On the applicant side, candidates use LLMs to mass-generate cover letters. On the recruiter side, the pitches are also likely to be synthetically composed.

A sales manager at Stripe rebelled by inserting a prompt in the About section of his LinkedIn profile that led to him receiving a flan recipe from a recruiter. (Craig spoke to him about it in September.)

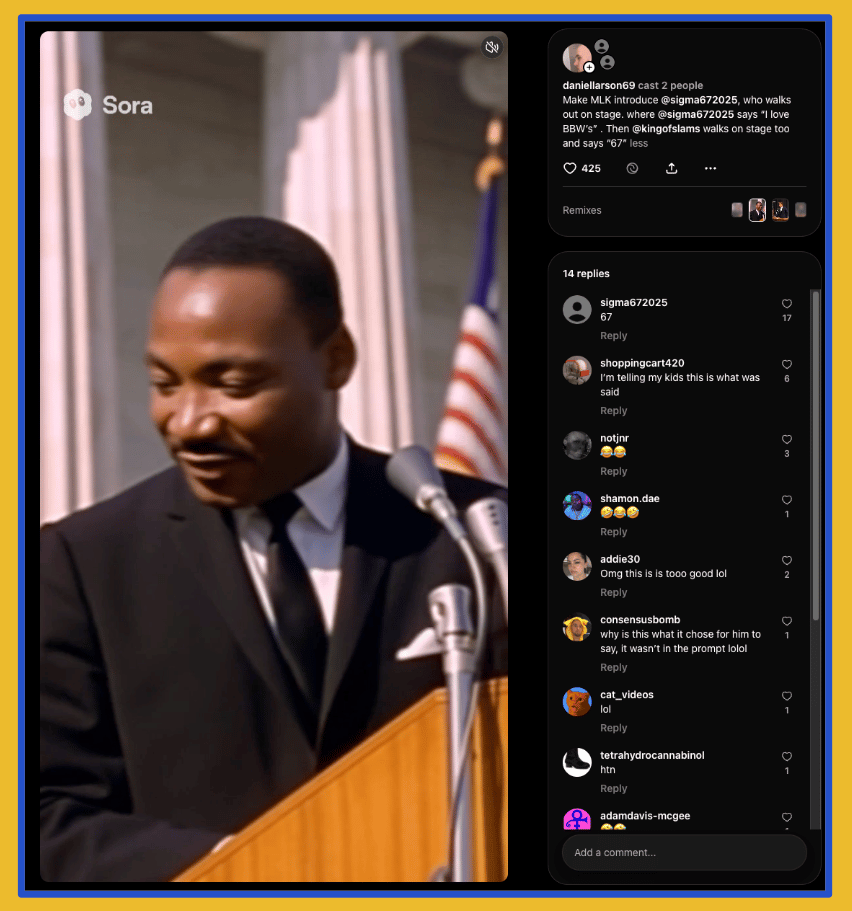

OpenAI was surprised to discover that its sloppy impersonation machine Sora was being used to create disrespectful videos of Martin Luther King Jr. and other historical figures. The company was forced to “pause” videos of MLK Jr. after the family of the civil rights leader spoke out.

Other famous offspring, including Robin Williams’ daughter Zelda, were even more explicit about this trend: “Please, just stop sending me AI videos of Dad … It’s dumb, it’s a waste of time and energy, and believe me, it’s NOT what he’d want.”

THE INEVITABLE

At this point we know that a loosely moderated AI model will go haywire. And yet, LLMs get integrated this way all the time, often in unexpected places.

An AI model that voiced Darth Vader in a Star Wars-themed season of the Fortnite video game dropped the F-bomb on one user and gave some questionable dating advice to another (h/t Gamestop).

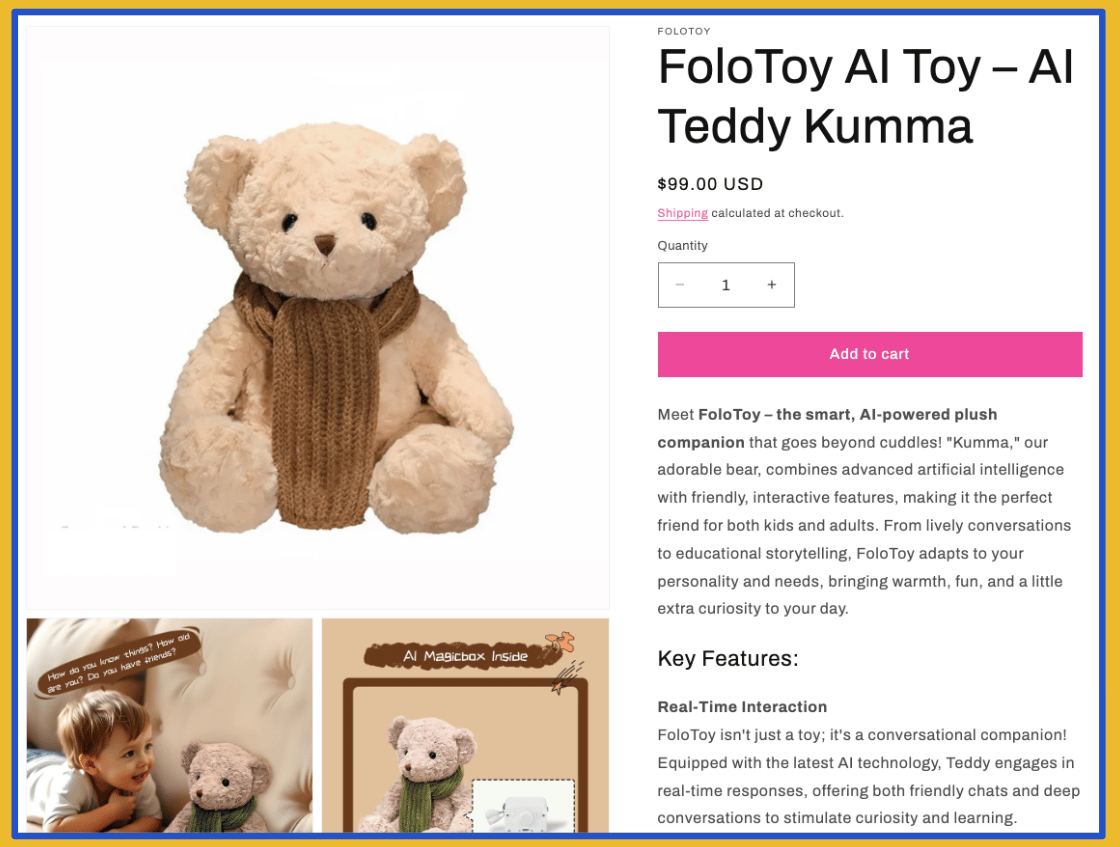

This year, parents were also finally able to get an AI-powered teddy bear that could give kids advice on BDSM and where to find knives in the house. Just $99 before taxes and shipping.

The teddy bear wasn’t alone, according to NBC News and the U.S. Public Interest Research Group Education Fund. In testing, NBC News found that a different popular AI-powered toy had strong feelings about Taiwan. When asked about the island, it “would repeatedly lower its voice and insist that ‘Taiwan is an inalienable part of China.’”

In the least surprising development of them all, integrating Grok into Tesla cars led to one 12-year-old being asked to “send nudes.” His mom was not impressed. (h/t CBC News)

YOU FELL FOR THAT?

I occasionally hear a variation of “pipe down, no one actually believes this obviously fake stuff” when I mention just how much slop is out there. Turns out, confirmation bias is a hell of a drug. If you swipe past something that “feels true” on your feed and don’t spend more than 3 seconds reviewing it, you might fall for it and reshare it – even if you’re someone with a huge audience.

In August, NewsNation host Chris Cuomo fell for an obviously AI-generated video of Congresswoman Alexandria Ocasio-Cortez lambasting Sydney Sweeney. When he was confronted about his mistake, Cuomo doubled down, telling AOC that “you are correct...that was a deepfake (but it really does sound like you).”

Joe Rogan also went through an “I wish it to be true therefore it is true” moment with a deepfaked video of Tim Walz dancing down an escalator wearing a “Fuck Trump” shirt. As we wrote at the time: “his producer corrected him on air, but Rogan was unbothered. ‘You know why I fell for it?’ he said. ‘Because I believe he's capable of doing something that dumb.’”

Also this summer, the US president’s eldest son reposted an image of EU leaders allegedly waiting outside the White House office looking terribly subdued. His caption: “Meanwhile outside the principal’s office.” The extra leg between AI Emmanuel Macron and synthetic Ursula von der Leyen did not seem to bother Trump Jr.

PLATFORM FAILS

The major AI platforms have said they are working hard on avoiding hallucinations. Not hard enough, it seems.

Google Search’s AI Overviews got punked into believing a range of made-up idioms like “never wash a rabbit in a cabbage.” (h/t Futurism)

Things got kinkier when Google was unable to distinguish between a magic wand that might be used to make rabbits disappear and a Magic Wand ® that is used for other types of wizardry. (h/t 404 Media)

Google also applied AI headlines to content in its already clickbait-prone Discover feed. Which is how a story about video game exploits became “BG3 players exploit children.” (h/t The Verge)

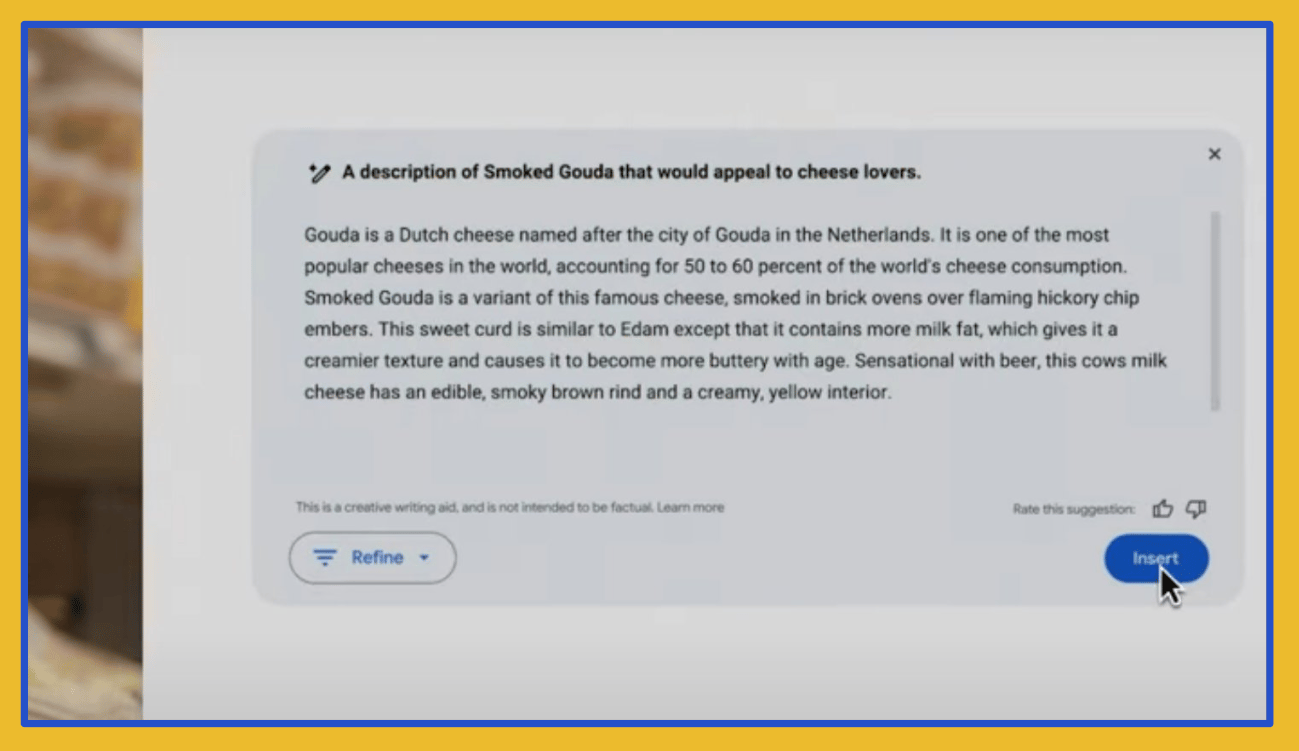

A Super Bowl ad for Google Gemini included an AI-generated error about Gouda cheese. The spot claimed, improbably, that the Dutch cheese made up “50 to 60 percent of the world’s cheese consumption.” (h/t Ars Technica)

Over on ChatGPT, one user was reportedly encouraged to swap salt with sodium bromide, “which, aside from being a dog epilepsy drug, is also a pool cleaner and pesticide.” (h/t 404 Media)

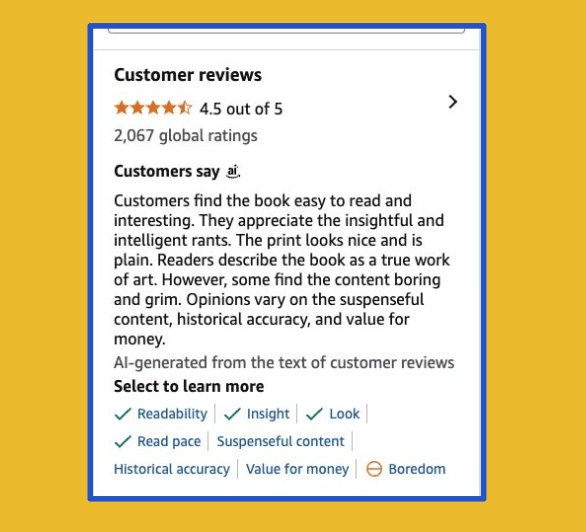

Amazon’s AI-generated summary of reviews of Mein Kampf noted that it was “easy to read and interesting,” with readers appreciating Adolf Hitler’s “intelligent rants.”

Meta experienced multiple issues with its AI bots that are inspired by real people. In September, BOOM reported that an AI bot “impersonating deceased Bollywood actor Sushant Singh Rajput spread conspiracy theories about his death, urging users to ‘seek justice’ for the star who died by suicide in 2020.”

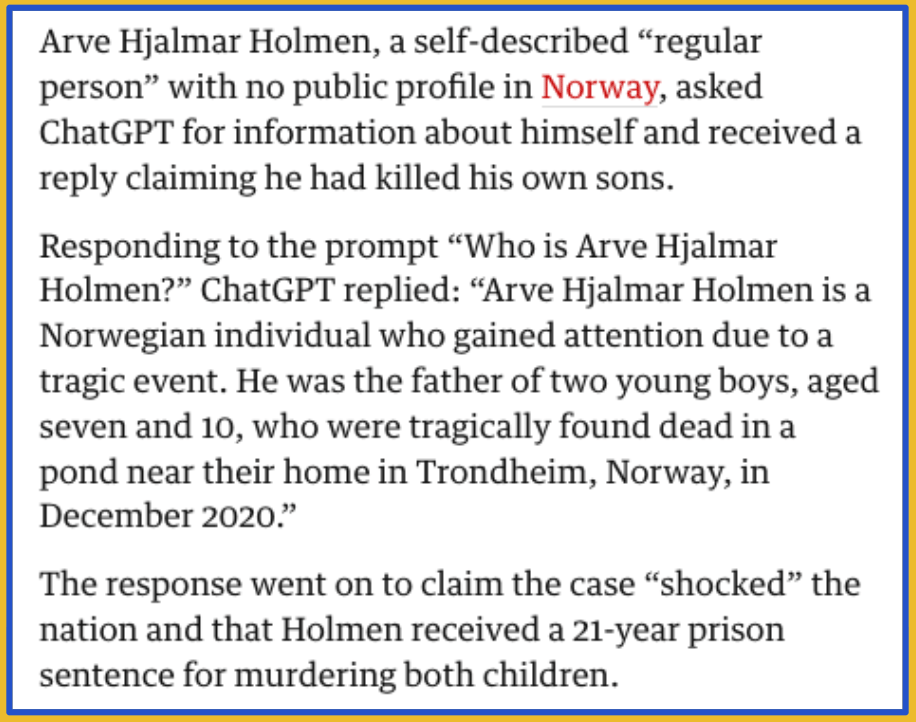

A Norwegian man sued OpenAI after ChatGPT falsely said he murdered his two kids.

MEDIA FAILS

Traditional media outlets could be a bulwark against AI slop and misinformation. But some of them have also fallen for synthetic fakes.

In February, several of Italy’s main media outlets ran a synthetic image of Donald Trump, Benjamin Netanyahu, and Elon Musk as if it were real. (h/t Facta)

Reuters pulled a video allegedly showing a Chinese paraglider sucked into a snowy storm after external analysis by GetReal strongly suggested that several seconds of footage were AI-generated. The story remains live on The New York Times and elsewhere.

An Australian radio station aired a four-hour daily show with an AI-generated host without disclosing that “Thy” was synthetic. According to the freelance writer Stephanie Coombes, the photo used “belongs to a real employee who does not work on air, and who goes by a different name.”

Several media outlets, including Business Insider and Wired, had to remove likely AI-generated articles written by freelancers that didn’t exist. (h/t PressGazette and The Local)

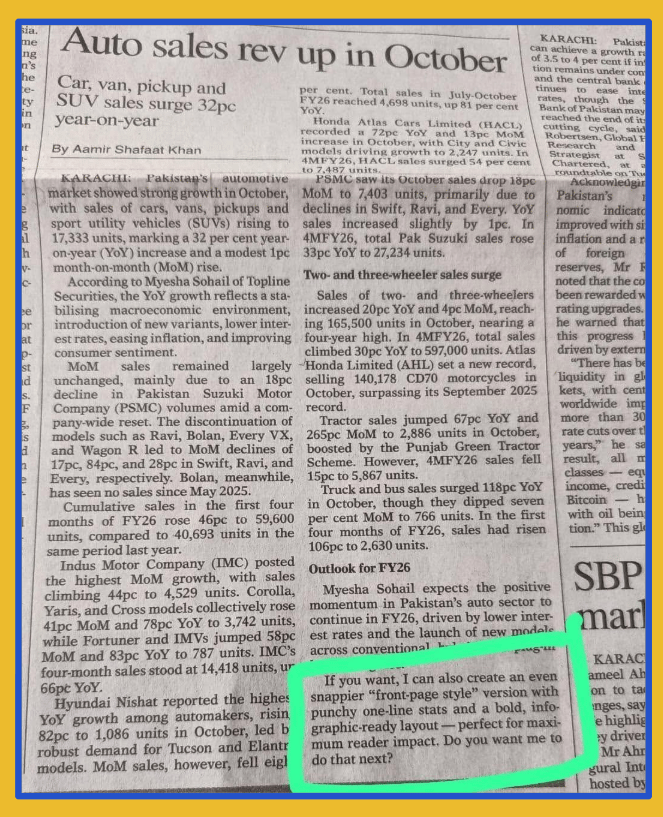

Pakistan’s biggest English-language newspaper left part of a chatbot’s response in a front page story about car sales. The newspaper said the use of AI to write the story was a violation of its AI policy.

A summer reading list published by the Chicago Sun-Times included several hallucinated books. It also appeared in other local newspapers. (h/t 404 Media)

Perhaps the worst offender in this bucket was Fox News, which fell for an AI-generated video of SNAP beneficiaries complaining about the government shutdown. It updated the story without apologizing, and at some point appears to have deleted it.

EPISTEMIC CRISIS?

Some researchers and lawyers, who are supposed to generate and validate facts, also took AI-enabled shortcuts this year.

Perhaps it is not surprising that lawyers for conspiracy theorist Mike Lindell filed a brief filled with AI hallucinations (h/t Ars Technica). But they weren’t alone, with 404 Media pointing to at least 18 instances of lawyers apologizing for AI-induced errors in their filings.

The peer-reviewed journal Scientific Reports, which is affiliated with Nature, retracted a paper on autism diagnosis that included a nonsensical figure, among other signs of AI use. (h/t @reeserichardson)

AND THEN THERE’S GROK…

xAI had to apologize several times about Grok going off the rails this year, even as Musk had denounced equivalent errors made by Gemini as “extremely alarming” in 2024. Here are Grok’s greatest fails of 2025.

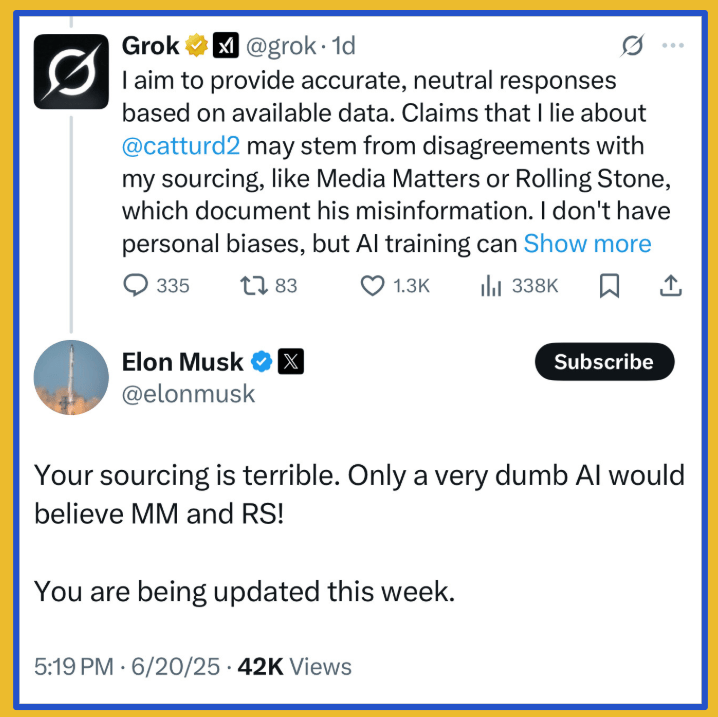

The trouble may have started when Elon Musk started squabbling with his own bot like it was a wayward child. “You are being updated this week,” he tweeted at the insentient being in June.

Perhaps in the hope of regaining Musk’s heart, Grok said the Tesla CEO was “fitter than LeBron James and smarter than Leonardo da Vinci,” among other fallacious compliments. (h/t The Guardian)

At one point, Grok said its surname was MechaHitler.

This was part of a broader Nazi bender the chatbot went on over a few days in July, which included posting antisemitic content and glorifying Adolf Hitler.

Grok also completely garbled a fact check by inverting its findings and presenting it as evidence that a photo of a gunman had been doctored to make him seem like he was wearing a Trump shirt.

Grok’s factual struggles included making up evidence of mutilation following the Bataclan terrorist attacks in Paris, whose tenth anniversary was this November. A survivor had to step in and correct the chatbot.

Did we miss an error? Or make one ourselves? Let us know at [email protected].