THIS WEEK IN FAKES

Yet another “AI candidate” got creamed. X’s image-generator is predictably bad. Australia says a whole lot of crypto ads on Facebook were scams. Misinfo-tracking tool CrowdTangle is dead, but the EU is asking questions. The FBI is sharing leads on foreign disinfo operations again. SF is suing AI nudifiers (go get ’em!). Google deleted the deepfake porn app I flagged in last week’s newsletter (Apple has not).

TOP STORIES

AI THIRST TRAPS OF INSTAGRAM

I follow some personal trainers on Instagram, so its algorithm has decided that I am interested in the overall category of shirtless men. Ten days ago, the impeccably chiseled Noah Svensgård showed up on my Explore Feed.

The account’s aesthetic screams AI. So do other details I spotted across his posts, including gibberish text, eyeglasses with inconsistent arms and a smushed-up watch.

In the year of the first AI beauty pageant and people marrying their chatbots, it is not surprising that I stumbled on one likely deepfaked hot dude.

It is more noteworthy, however, that AI avatars are flooding Instagram without the platform doing much to disclose their artificiality. And it hints at a future where body expectations on the world’s premier image-based social network are set by accounts that are not just unrealistic but entirely unreal.

Over the past week, I collected more than 900 Instagram accounts of stereotypically hot people that I am either certain or highly confident are AI-generated.1 I could have easily collected 900 more.

The accounts are predominantly female, typically scantily clothed and often improbably endowed.

The accounts in my dataset have a cumulative 13 million followers2 and have posted more than 200 thousand pictures.

These accounts exist primarily to make money, building audiences that are then redirected to Fanvue, Patreon, Throne and bespoke monetizing websites. On those platforms, AI nudes are exchanged for real money (typically a $5-$15 monthly subscription).

As with every AI grift, a cottage industry of marketers is offering to teach creators how to get in on the hustle. One of them is professor.ep, the creator of Emily Pellegrini, a semi-famous AI model with 262K followers. He claims the account netted him more than $1M in 6 months. While I can’t verify this figure, Fanvue appears to have confirmed Pellegrini’s account earned more than $100K on its platform alone.

In promotional material, professor.ep encourages people to get into “AI Pimping” and notes that the “increasingly blurred” line between humans and AI drives engagement.

He’s posted this totally normal video to drum up business.

professor.ep isn’t alone. Other tools at the service of the accounts I’ve found include genfluence.ai and glambase.app (to create the models) and digitaldivas.ai (which offers a $49.99 course in “AI Influencer Instagram Mastery”).

To be clear, I don’t think there’s anything wrong with people paying for AI erotica. This newsletter does not kink-shame.3

I do worry, however, about the effect of a growing cohort of fake models on a social network built for real people.

In reviewing accounts, not once did I come across the “AI info” label that Meta uses to signal some synthetic content. [Update: This is partly due to the fact that I was logged into Instagram on Firefox rather than in the app. Following publication I did spot the label on a minority of the posts within the iOS app. I apologize for the inaccuracy.4]

In the absence of consistent flagging from Instagram, about half of the accounts do the responsible thing and self-identify as AI-generated in their bio (or very rarely, with their own watermark).

But the remainder either wink at the fact that they are unreal with terms like “virtual soul” or a 🤖 emoji, hide any disclosure at the end of a long bio so it’s behind the ‘more’ button, or just full-on avoid the subject.

There is also a sizable cohort of accounts that claim to be “real girls” that are enhanced by AI. This may be a thing, but I did not have time to study the accounts enough to believe it.

It’s not clear to what extent followers are in on the synthetic nature of their thirst traps, or whether they care. Most posts I reviewed had collected your standard cascade of hearts and flattering replies.

But the tenor of some comments suggests that some folks don’t realize what’s going on.

My guess is that the majority of people following don’t know they are interacting with a computer-generated creature.

On the one hand: Who cares? What harm do some imaginary thirst traps really cause?

I don’t think the harm is in the thirst traps — I think it’s in their coexistence with real accounts.

Instagram and others have already normalized retouching photos of naturally impressive physiques. Now the unrealistic body standards are on track to become completely unreal. One user’s comment on a (transparently disclosed) male AI model is worth quoting at length:

[these pictures] make people with normal, actual human bodies feel simultaneously horny and incredibly shit about this body and their own. Probably [the model] will help encourage teenage boys to spend their lives in a gym and start gruelling roid regimes before they are even 20 in order to look as much as possible like this whereas in reality only a tiny fraction of humanity has the genes to have a real, natural body like this. But yeah - hot.

Beyond propagandizing certain types of bodies, I think Instagram is facilitating a new blended unreality.

The AI thirst traps of Instagram are gradually training us to either ignore or fail to care about the difference between photos of real humans and fake ones. What is happening in one corner of the social network will spread (partly by design).

Detection-based labeling won’t get us out of this. At least as of this moment, Instagram’s “AI Info” is functionally meaningless.

I think there is an urgent need to discuss content credentials or personhood credentials, and to test them at scale.

The alternative is social platforms overwhelmed by AI-generated slop.

Do you have any ideas on what else to do with this dataset? Please reach out!

SPAMALANCHE

Speaking of the incentive to post AI slop on Meta platforms, Renée DiResta and Josh Goldstein published an analysis of 125 Facebook pages that posted synthetic dreck. Nothing surprising for those who have followed 404 Media’s work on this topic closely, but it’s still a depressing review that is worth your time.

WIKIPEDIA AS A BATTLEFIELD

Aos Fatos found that ahead of mayoral elections in Rio de Janeiro and São Paulo, the Wikipedia pages of some of the candidates are seeing heightened activity. The Brazilian fact-checkers found cases where unflattering information was removed and others where misleading content was added.

DETECTOR DISSENSUS

“Dozens of companies in Silicon Valley have dedicated themselves to spotting AI deepfakes, but most methods have fallen short,” warns The Washington Post in a good overview of the state of deepfake detectors. The Post tested eight of them (TrueMedia, AI or Not, AI Image Detector, Was It AI, Illuminarty, Hive Moderation, Content at Scale and Sight Engine) on six pictures. Not once did all of the detectors correctly rate the photos. And as we saw in FU#6, basic edits like compression, cropping and resizing can have a significant impact on detector accuracy too.

TANG’S TAKES

Also not impressed with deepfake detectors? Taiwan’s former Minister of Digital Affairs Audrey Tang, who had this to say to Politico about content-layer approaches in general:

I think the idea that we can use watermarking, or any sort of content provenance or content-layer-based detection to fight deepfakes, to fight this idea of information manipulation toward polarization and election meddling and so on — I think that is quite hyped. It is probably not delivering, at least not for this election.

Tang was more optimistic about how little misinformation affected the Taiwanese elections earlier this year:

I was surprised how effectively — just by prebunking and collaborative fact-checking alone — January election was largely free from the side effects of deepfakes and polarization attacks. You can even say that some of them backfired, because people were prebunked and were ready for it. And so when those attacks actually happen, it actually increased our solidarity instead of sowing discord.

CO-OPTED CREDIBILITY

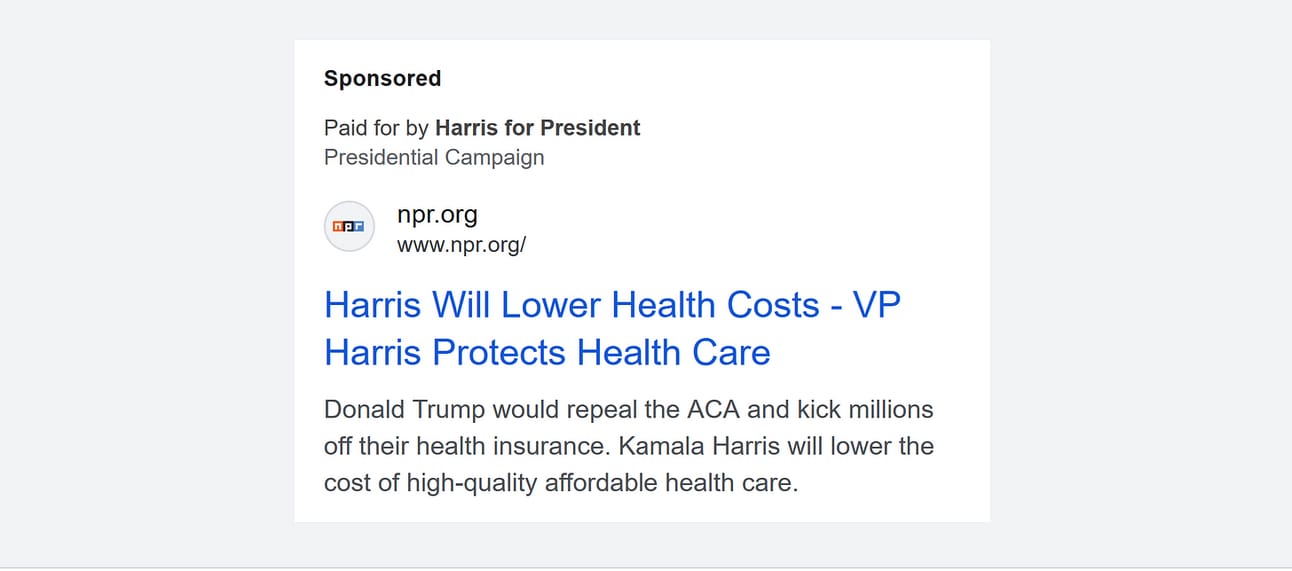

Axios reports that Kamala Harris’ presidential campaign is buying Search ads that link to news media articles but editing their headlines to make them appear more flattering to the Democratic presidential candidate (see below). This is not a new trick, but it is a dangerous one, as it attempts to co-opt the credibility of independent media outlets and risks portraying them as marketing tools for a political party.

11 PERCENT

11 percent of US minors surveyed for Thorn’s Youth Monitoring Report claim that one of their friends/classmates has used AI tools to generate deepfake nudes of other kids. The 1,040 respondents, aged 9-17, were contacted in November and December of last year.

MISINFO MODS

Interesting analysis by DFRLab on gaming, a fringe front of the disinformation wars:

The VK page described African Dawn as a new chapter in the history of Africa that players can write, and an opportunity to learn about the history and culture of the continent. In the game, players are in a world where two military forces compete on the continent, a Pan-Africanist movement trying to create a new future for the continent, and neo-colonial forces trying to maintain their influence. However, according to a report about African Dawn on the game and African initiative’s website, if a player chooses to take charge of an African country, they receive “military-technical and economic support from Russia” and its African Corps, a replacement for the Wagner Group in the continent.

THE UNPLEASANTNESS OF THINKING

Social scientist Matthew Facciani has a valuable take on this psych paper:

Meta-analysis finds a significant relationship between mental effort and negative affect. In other words, people really don't enjoy doing hard thinking. This is a major psychological barrier when trying to educate or dispel myths.

A simple and false explanation, especially one that aligns with previously held beliefs, is much less cognitively taxing than learning about the messiness of reality. It makes combatting misinformation very difficult on a psychological level and this is why educators need to be creative to keep people engaged during the learning process.

NOTED

Playing Gali Fakta inoculates Indonesian participants against false information (HKS Misinfo Review)

AI Cheating Is Getting Worse (The Atlantic)

Fake websites are turning an NYC political race real ugly (Gothamist)

Polish anti-doping agency targeted by cyber attack, ‘fake’ test results leaked (The Athletic)

The FTC’s fake review crackdown begins this fall (The Verge)

How ‘Deepfake Elon Musk’ Became the Internet’s Biggest Scammer (NYT)

Doppelgänger operation rushes to secure itself amid ongoing detections, German agency says (The Record)

Evaluation of ChatGPT as a diagnostic tool for medical learners and clinicians (PLOS One)

Elections Officials Battle a Deluge of Disinformation (NYT)

Elon Musk said he’d eliminate bots from X. Instead, election influence campaigns are running wild (Rest of World)

WILDHALLUCINATIONS: Evaluating Long-form Factuality in LLMs with Real-World Entity Queries (arXiv)

The role of mental representation in sharing misinformation online (Journal of Experimental Psychology: Applied)

Disagreement as a way to study misinformation and its effects (arXiv)

Bots backing Linda Reynolds and Gina Rinehart likely a foreign state actor sowing division, expert says (The Guardian)

UK media watchdog starts hiring spree amid pressure to curb misinformation (FT)

FAKED MAP

The Vinland map was supposed to be a 15th-century artifact of Nordic exploration of North America preceding Columbus’ voyages (see top left). Acquired by Yale in the 1950s, it was definitively proven to be a fake 60 years later because the ink used did not exist before the 1920s.

1 I collected these accounts through a basic cascading effort reviewing the follows and followers of accounts I’d identified as AI-generated, then letting the Instagram algorithm recommend some more. I did not use an AI detector, relying instead on visual and contextual cues as well as self-identification of the accounts. Please reach out if you want to access the raw data.

2 This figure is an overestimate of their actual reach because there will be some follower overlap among the accounts.

3 This paragraph initially read: “To be clear, I don’t think there’s anything wrong with people paying for AI erotica. This newsletter does kink-shame.” Those two things can’t both be true — and I should have included a “not” in the second sentence. Thanks to eagle-eyed readers Ben and Jacob for spotting it immediately!

4 The labels also cycle out of view when the post includes a location and a song.