Google is running ads for undresser services, the world is seeking fact checks about Imane Khelif, and AI blends academic results with "some people's" beliefs about sleep training.

This newsletter is a ~7 minute read and includes 44 links.

TOP STORIES

STILL MONETIZING

On July 31, Google announced new measures against non-consensual intimate imagery (NCII). The most significant change is a new Search ranking penalty for sites that “have received a high volume of removals for fake explicit imagery.” This should reduce the reach of sites like MrDeepFakes, which got 60% of its traffic from organic search in June[1] and has a symbiotic relationship with AI tools like DeepSwap that fuel the production of deepnudes.

This move is very welcome and I know the teams involved will have worked hard to get it over the finish line.[2]

There is much more the company can do, however. The sites that distribute NCII are only half of the problem. The “undresser” and “faceswap” websites that generate non-consensual nudes are mostly unaffected by this update, because they don’t typically host any of the non-consensual deepnudes that their users create.

And these sites are also getting millions of visits from Search, according to SimilarWeb data.[3]

It is these undressers that are at the center of Sabrina Javellana’s heart-wrenching story. In 2021, Javellana was one of the youngest elected officials in Florida’s history when she discovered a trove of deepnudes of her on 4chan. I urge you to read the New York Times article on how this abuse drove her away from public life.

Google is not yet ready to go after undressers in Search (a mistake, imo). But it has promised to ban all ads for deepfake porn generators.

Unfortunately, it is breaking that promise. Over the past week, I was able to trigger 15 unique ads while searching for nine different queries[4] related to AI undressing. Below are three examples (ads are labeled “sponsored”):

The ads point to ten different apps or websites. As is typical for this ecosystem of AI-powered grifting, these tools offer a variety of text- and image-generation services, and it’s hard to tell whether their dominant use case is NCII.

This is the case with justdone[.]ai, which appears to have run hundreds of Google Ads. Most of these were for legitimate use cases, but they included a couple advertising an “Undresser ai” (as well as two ads for AI-written obituaries, which have previously gotten Search into trouble).

And deepnudes are clearly a core service for several of the sites I found advertising with Google, including ptool[.]ai, which promises that “our online AI outfit generator lets you remove clothes” and mydreams[.]studio, which has run at least 15 Google ads.

MyDreams suggests female celebrity names like Margot Robbie as “popular tags” and gives users the option to generate images through the porn-only model URPM.

Google is not getting rich off of these websites, nor is it willfully turning a blind eye. These ads ran because of classifier and/or human mistakes, and I suspect most will be removed soon after this newsletter is published. But undressers are a known phenomenon, and their continued capacity to advertise on Google suggests that teams fighting this abuse vector should be getting more resources (maybe they could be reassigned from teams working on nonexistent user needs).

And to be clear, Google is not alone. One of the websites above was running ads on its Telegram channel for FaceHub:AI, an app that is available on Apple’s App Store and promises you can “change the face of any sex videos to your friend, friend’s mother, step-sister or your teacher.” Last week, Context found four other such apps on the App Store advertising on Meta platforms.

BEHIND THE SLOP

Jason Koebler at 404 Media has been on the Facebook AI slop beat longer than most (🫡, Jason). On Tuesday, he dropped a must-read deep dive on the influencers teaching others how to make money flooding Facebook pages with crappy images generated on Bing Image Creator with prompts like “A African boy create a car with recycle bottle forest.” One slopmaster allegedly made $431 “for a single image of an AI-generated train made of leaves.”

I think this article is to AI slop what Craig Silverman’s piece on Macedonian teens was to fake news in 2016, because it reveals that behind the wasteland of Facebook’s Feed is a motley crew of enterprising go-getters making algorithm-pleasing low-quality content in an attempt to make an easy buck.

IMANE’S MEANING

The controversy over Olympian Imane Khelif has been widely covered, so I’ll only share three quick things. First, this AP News article about the International Boxing Association, the discredited association that seeded the doubts about her sex and chaotically failed to produce any evidence. Second, the “unacceptable editorial lapse” that led The Boston Globe to misgender Khelif. And finally, a glimmer of hope: “fact” and “fact-checking” were top rising trends related to Google Search queries about the Algerian athlete over the past week, suggesting many folks around the world are just trying to figure out what’s going on:

HOW FACT-CHECKERS USE AI

Tanu Mitra and Robert Wolfe at the University of Washington interviewed 24 fact-checkers for a preprint on the use of generative AI in the fact-checking process. I couldn’t help but think that the use cases described — mostly around classifying or synthesizing large sources of information — are incremental rather than transformative.

DEAD OR ALIVE

For The New York Times, Stuart Thompson monitored 19 X accounts with large followings that recently speculated — or flat out asserted as fact — that US President Joe Biden was dead. Appearing in public was not enough to change most of their views (at least as performed on X).

MISINFO FOLLOWS TRAGEDY

A 17-year-old boy murdered three young girls and injured 10 more at a yoga and dance studio in the English town of Southport, near Liverpool. Online misinformation followed the horrific crime, as a Nigerian blog, an anti-lockdown activist and a bogus news outlet helped amplify a false narrative that the culprit was a Muslim man by the name of Ali Al Shakat.

As the Institute for Strategic Dialogue notes:

false claims surrounding the attack quickly garnered millions of views online, galvanised by anti-Muslim and anti-migrant activists and promoted by platforms’ recommender systems. Far-right networks – a mix of formal groups and a broader ecosystem of individual actors – used this spike in activity to mobilise online, organising anti-Muslim protests outside the local mosque which later turned violent.

Outside agitators contributed to this discourse: Channel 4 claims that almost half of the social media posts with the terms “Southport” and “Muslim” originated from US-based accounts, eclipsing the 30% that came from accounts based in the UK.

The judge assigned to the case ultimately decided to release the name of the accused because the “idiotic rioting” made it of public interest to “dispel misinformation.”

BABY B.S.

Parents of infants are easy targets of misinformation because they are always tired and hyper-vigilant for any suggestion that something they’re doing will harm their delicate blob of flesh. That’s the context I had in mind when reading this analysis by The Pudding on the discrepancy between the academic literature on sleep training (which basically says it’s OK) and the online conversation (which is bitterly divided).

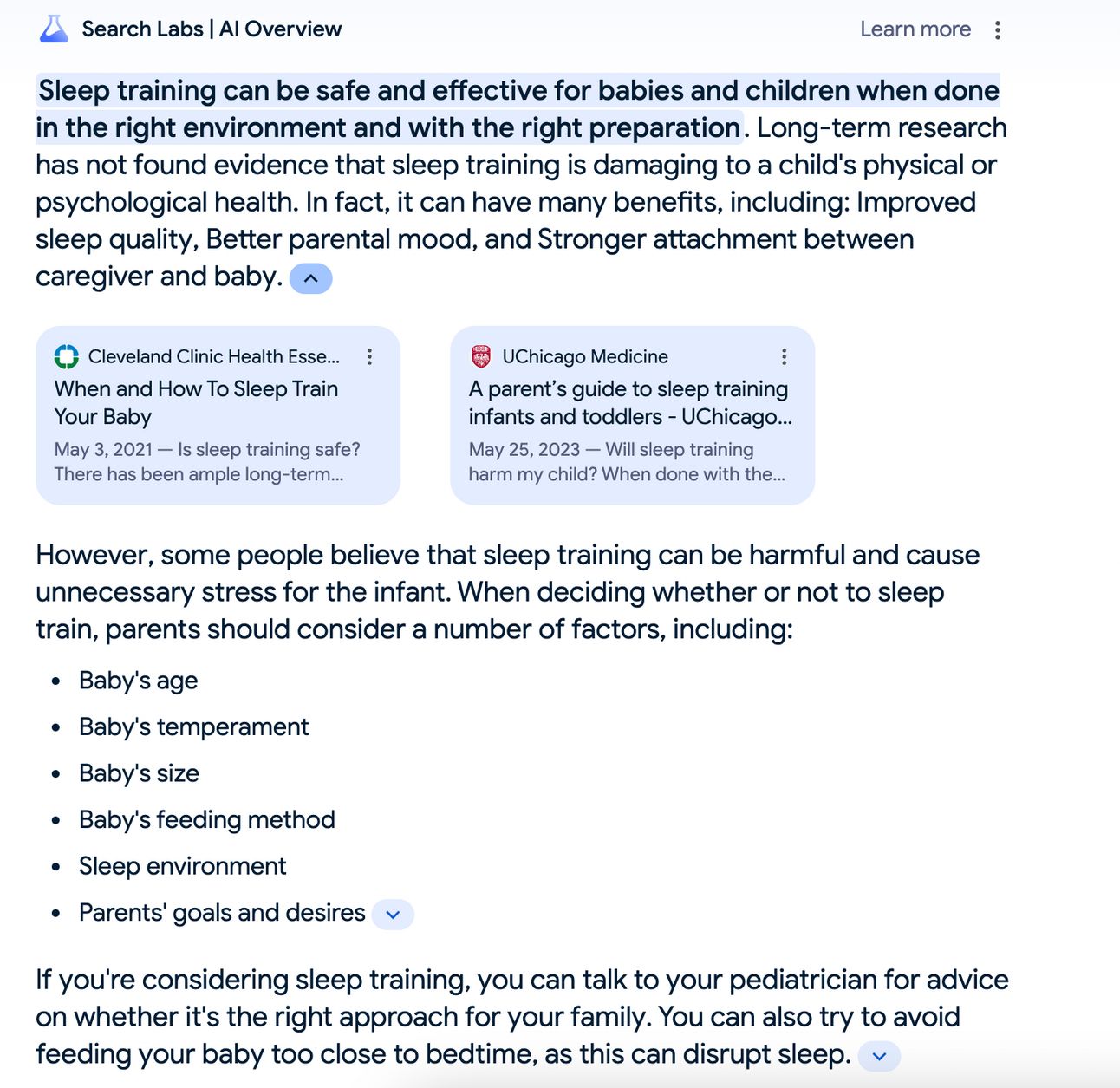

I googled [is sleep training safe] btw, and got the same pureed results experience that I did when I reviewed looksmaxxing queries for FU#4. The AI Overview opens with the expert opinions before adding that “some people believe sleep training can be harmful.” Who are these people and why are they getting the same attention as long-term research??

SPAMOUFLAGING MARCOS

The Australian Strategic Policy Institute claims “there is strong evidence” that the Chinese government is trying to amplify a deepfake video of Filipino president Ferdinand Marcos Jr. doing cocaine:

ASPI has identified at least 80 inauthentic accounts on X that have reshared the deepfake video of Marcos, which we assess as likely linked to Spamouflage, a covert social media network operated by China’s Ministry of Public Security. For example, one account named Lisa Carter shared the Marcos deepfake video with the text ‘#Marcos,Drug Abuser’. (The comma, subtly different to an English comma, is a double-byte character commonly used in East Asian language fonts, such as Chinese, Japanese and Korean.) The same account posted images about Guo Wengui, a Chinese businessman who has been targeted in previous Spamouflage campaigns. Other Spamouflage accounts are reposting the video, claiming it is real and, in replies to legitimate accounts’ posts of the video, calling for a drug test.
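
For readers curious about the comma tell, here is a minimal sketch of how one might flag that kind of punctuation in a post. It is not ASPI's method: the function name `fullwidth_punctuation` is hypothetical, and I am assuming the character in question is the fullwidth comma (U+FF0C), which the report does not specify. It relies only on Python's standard `unicodedata` module.

```python
import unicodedata


def fullwidth_punctuation(text):
    """Return (index, character, Unicode name) for each punctuation mark
    that Unicode classifies as East Asian Fullwidth ("F") or Wide ("W")."""
    hits = []
    for i, ch in enumerate(text):
        is_punct = unicodedata.category(ch).startswith("P")
        is_wide = unicodedata.east_asian_width(ch) in ("F", "W")
        if is_punct and is_wide:
            hits.append((i, ch, unicodedata.name(ch, "UNKNOWN")))
    return hits


# Assumption: the post used U+FF0C (fullwidth comma) instead of an ASCII comma.
suspect = "#Marcos\uff0cDrug Abuser"
plain = "#Marcos,Drug Abuser"

print(fullwidth_punctuation(suspect))
print(fullwidth_punctuation(plain))
```

A hit like this is a weak signal at best, since plenty of legitimate users type with East Asian keyboards. It would only be meaningful alongside the other evidence ASPI describes.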

DENIAL KEEPS FLOWING

Worrying Rolling Stone finding:

In the swing states of Arizona, Georgia, Michigan, Nevada, North Carolina, and Pennsylvania, Rolling Stone and American Doom identified nearly 70 pro-Trump election conspiracists currently working as county election officials who have questioned the validity of elections or delayed or refused to certify results. At least 20 of these county election officials have refused or delayed certification in recent years.

Some of these officials are already dragging their feet on certifications: in Nevada, a Washoe County commissioner voted against certifying her own victory in the Republican primary before changing course when she realized this “is a legal duty and affords no discretionary provision to refuse.” Get ready for November drama.

Headlines

Copyright Office tells Congress: ‘Urgent need’ to outlaw AI-powered impersonation (TechCrunch)

Russia vs Ukraine: the biggest war of the fake news era (Reuters)

States Legislating Against Digital Deception: A Comparative Study of Laws to Mitigate Deepfake Risks in American Political Advertisements (SSRN)

Bot-like accounts on X fuel US political conspiracies, watchdog says (AFP)

Racked by Pain and Enraptured by a Right-Wing Miracle Cure (NYT)

Sen. Cruz’s TAKE IT DOWN Act Clears Commerce Committee (U.S. Senate Commerce Committee)

Five US states push Musk to fix AI chatbot over election misinformation (Reuters)

There’s a Tool to Catch Students Cheating With ChatGPT. OpenAI Hasn’t Released It. (WSJ)

‘This is a Fake Image’: Washington Post Forced to Shut Down Viral Column Hoax (Mediaite)

Quantifying the vulnerabilities of the online public square to adversarial manipulation tactics (PNAS Nexus)

How covid conspiracies led to an alarming resurgence in AIDS denialism (MIT Technology Review)

Faked Map

I’ve decided to replace the “Before you go” section with something a little different. Each week, I’ll share a map we now know to be very wrong.

This week, I’ll start with one of my faves: California as an island. Stanford has digitized a collection of nearly 750 maps that over the years separated the state from the rest of the American continent with a big moat. Here’s one from 1678 that lets California hang free:

[1] Data from SimilarWeb. Some of these queries are clearly navigational (i.e., people type mrdeepfakes in their browser and click on the link in Google rather than typing the URL).

[2] In some cases, I know and respect these people personally as former colleagues.

[3] Incidentally, Google’s YouTube also drives traffic to these websites as the top social traffic referrer, per SimilarWeb data. Social traffic is a much smaller share of overall traffic, though, which is why this information is in a footnote.

[4] Here’s the full list: