Deepfake videos that showed former special counsel Jack Smith warning of impending legal peril for Donald Trump, FBI Director Kash Patel, and other MAGA figures recently generated more than 1 million views on YouTube.

The videos, which were published across two channels, depicted a synthetic Smith sitting at a desk and reciting a script for roughly 10 to 15 minutes. They had titles like “Kash Patel FREEZES As Judge PLAYS FBI Wiretaps | Jack Smith” and “Is Mar-a-Lago taken by Federal to Cover Judgements?”

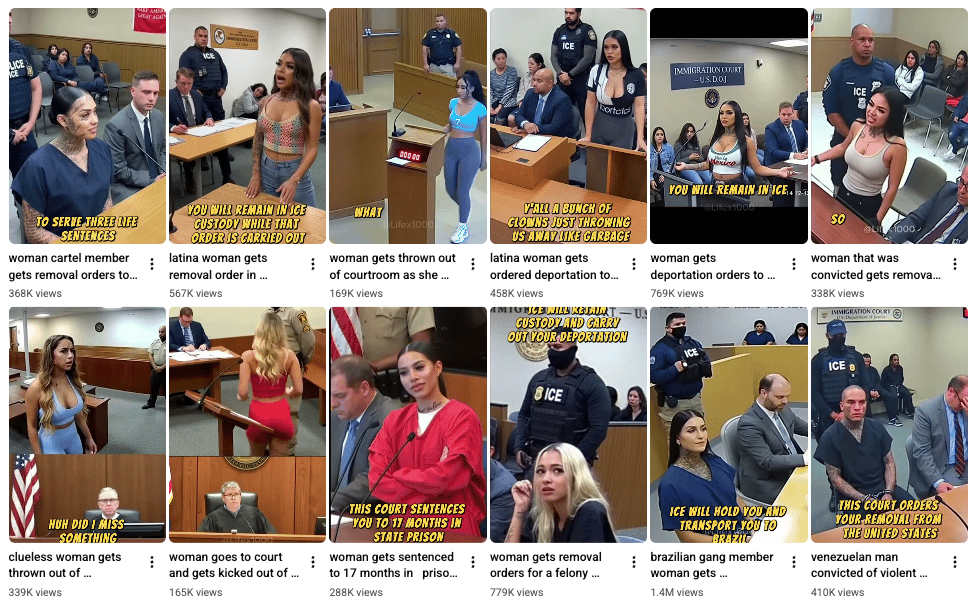

The Smith deepfake channels were part of a thriving YouTube niche of AI slop focused on courts, legal issues, and law enforcement. Indicator identified 24 channels that exclusively or primarily post AI-generated legal, courtroom, or bodycam content and that generated over 1.7 billion views. (A channel called Courtroom Consequences has over 800 million views but was excluded from the views total because it only recently began posting legal slop.)

The channels have names like CourtDramasTv, InjusticeShorts, and Judicial Saga. They typically posted fabricated courtroom scenes or synthetic bodycam or CCTV footage designed to ragebait viewers or exploit sympathy. Some mixed real footage and AI voice narration, or alternated between AI slop and real bodycam or courtroom footage, making it harder for viewers to tell what was real.

The channels illustrate how slop entrepreneurs identify popular content niches — such as courtroom and bodycam footage — and fill them with AI-generated copycats.

Some of the videos displayed a visible “Altered or synthetic content” label, which YouTube applies to what it classifies as “sensitive” content. But multiple channels didn’t use or display AI labels, inconsistently applied them, or inaccurately labeled AI content as being “Auto-dubbed,” according to Indicator’s review.
